How NVIDIA Jetson Software Works

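NVIDIA Jetson software is the flagship platform for building, deploying, and scaling humanoid robots and generative AI applications at the edge. It supports the full range of Jetson modules, providing a unified, scalable foundation from prototyping through production. The NVIDIA JetPack™ SDK powers real-time sensor processing, multi-camera tracking, and advanced robotics capabilities such as manipulation and navigation, all integrated within a robust AI ecosystem. Developers can draw on integrated frameworks such as NVIDIA Holoscan (sensor streaming) and Metropolis VSS (video analytics). Through NVIDIA Isaac™ robotics workflows, including generative AI foundation models such as NVIDIA GR00T N1, Jetson software supports fast, accurate, and transformative AI for robots and their deployment at scale.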

Explore NVIDIA Jetson Software and Resources

JetPack SDK

The NVIDIA JetPack SDK is a complete software suite for developing and deploying AI-powered edge applications on the NVIDIA Jetson platform.

Holoscan Sensor Bridge

NVIDIA Holoscan Sensor Bridge connects edge sensors to AI workflows for real-time, high-performance sensor data processing.

Jetson AI Lab

Jetson AI Lab, powered by NVIDIA tools and community projects, fosters innovation and hands-on exploration in robotics and generative AI.

NVIDIA Isaac

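The NVIDIA Isaac platform provides NVIDIA® CUDA® accelerated libraries, frameworks, and AI models for building autonomous robots, including autonomous mobile robots (AMRs), robotic arms, and humanoids.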

NVIDIA Metropolis

Use NVIDIA Metropolis to develop and deploy vision AI applications for smart cities, factories, and retail, with real-time video analytics at the edge.

Holoscan SDK

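Use NVIDIA Holoscan to build real-time edge AI applications that stream sensor data to the GPU for immediate inference in industries such as healthcare and life sciences.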

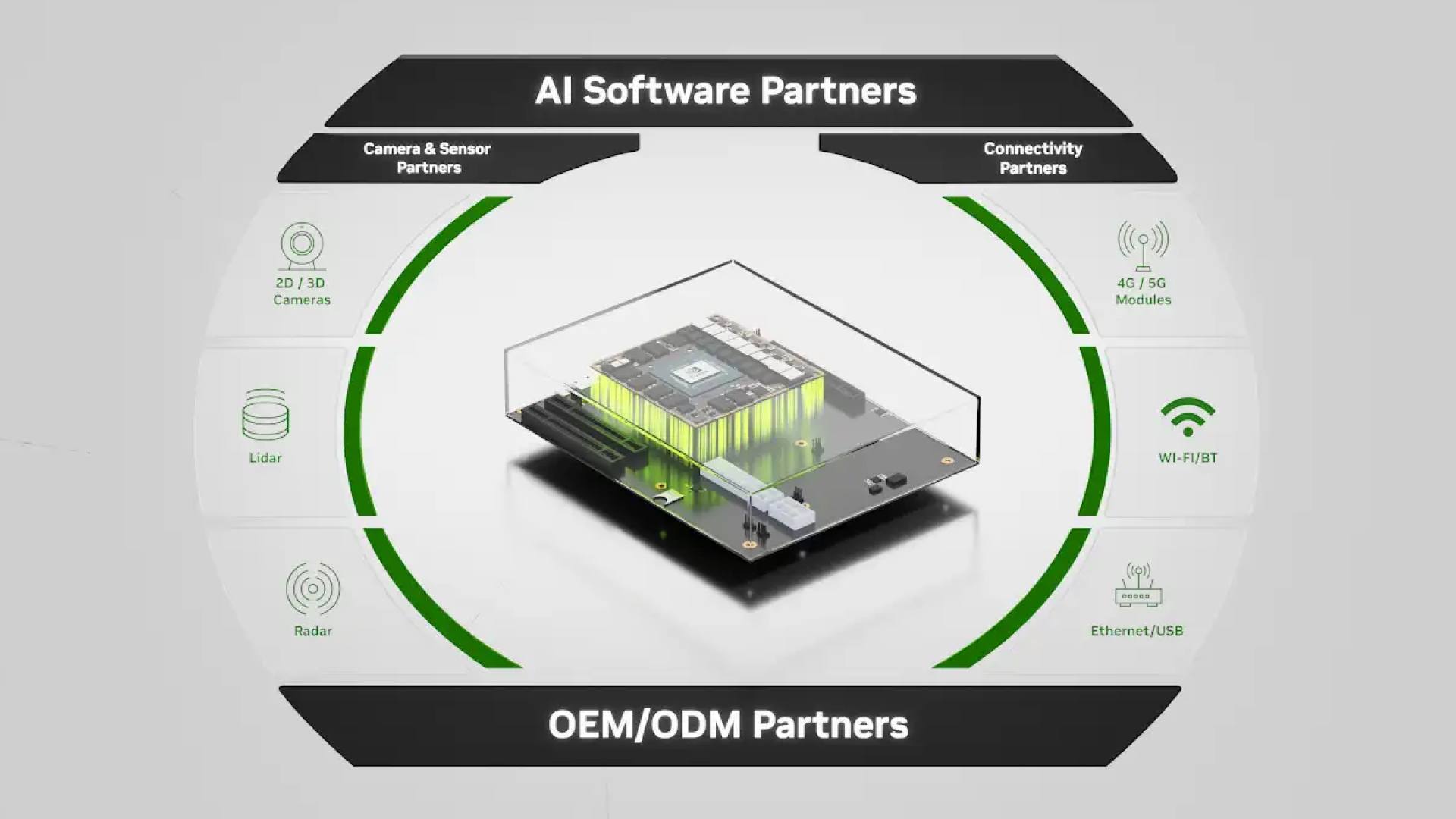

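NVIDIA Jetson Partner Ecosystem

The NVIDIA Jetson ecosystem offers a comprehensive range of products and services, including AI software, development tools, and hardware solutions such as servers, edge devices, and industrial PCs. These solutions serve industries ranging from robotics and manufacturing to retail, transportation, and healthcare, and are available in both commercial and ruggedized options.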