GPU Gems 3

GPU Gems 3 is now available for free online!

The CD content, including demos and content, is available on the web and for download.

You can also subscribe to our Developer News Feed to get notifications of new material on the site.

Chapter 2. Animated Crowd Rendering

Bryan Dudash

NVIDIA Corporation

With game rendering becoming more complex, both visually and computationally, it is important to make efficient use of GPU hardware. Using instancing, you can reduce CPU overhead by reducing the number of draw calls, state changes, and buffer updates. This chapter shows how to use DirectX 10 instancing with vertex texture fetches to implement instanced hardware palette-skinned characters. The sample also makes use of constant buffers, and the SV_InstanceID system variable to efficiently implement the technique. With this technique, we are able to realize almost 10,000 characters, independently animating with different animations and differing meshes at 30 frames/sec on an Intel Core 2 Duo GeForce 8800 GTX system, as shown in Figures 2-1 and 2-2.

Figure 2-1 A Crowd of Characters Animated Using Skinned Instancing

Figure 2-2 A Close-up Shot of the Animated Crowd

2.1 Motivation

Our goal with this technique is to use DirectX 10 efficiently to enable large-scale rendering of animated characters. We can apply this method to crowds, to audiences, and generally to any situation that calls for drawing a large number of actors, each with a different animation, and distinct mesh variations. In such scenarios, some characters will be closer than others, and thus a level-of-detail (LOD) system is important. With instancing, game designers can realize large, dynamic situations previously not possible without pre-rendering or severely limiting the uniqueness of the characters in the scene.

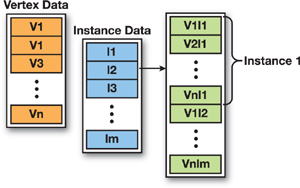

2.2 A Brief Review of Instancing

In this chapter, we assume a basic understanding of instancing; however, let's review the fundamentals. The term "instancing" refers to rendering a mesh multiple times in different locations, with different parameters. Traditionally, instancing has been used for static mesh objects such as leaves, grass, or other small pieces of mesh geometry that occur in great numbers throughout the scene. This is accomplished by binding a secondary vertex buffer that contains the custom per-instance parameterizations. At render time, the primary vertex buffer is looped over once for each instance, and the secondary buffer is incremented to parameterize each loop over the mesh. See Figure 2-3 for an illustration. In effect, this results in a much larger combined vertex buffer without having to manually create or transfer this buffer.

Figure 2-3 Instancing Basics

Under the DirectX9 API, using instancing is a little tricky, because the API requires an overloaded SetStreamSourceFrequency() function call. In addition, under DirectX 9, we are unable to index into constant memory based on the current instance; therefore, we must encode all the per-instance data into the vertex stream. This proves to be cache-inefficient as well as more difficult to integrate into an existing rendering engine.

In the DirectX 10 API, instancing has been moved to the core of the API. There are new draw functions, DrawInstanced() and DrawIndexedInstanced(), which support drawing multiple copies of a mesh, and vertex declarations now contain a special type to identify attributes that are per instance data (D3D10_INPUT_PER_INSTANCE_DATA).

2.3 Details of the Technique

Traditionally using instancing, we have not been able to render animated objects efficiently. With the advent of DirectX 10, efficient skinned animated instancing became possible.

Skinned instancing is an extension of regular instancing. It renders each character using hardware palette skinning. Instead of using the standard method of storing the animation frame in shader constants, we encode all frames of all animations into a texture and look up the bone matrices from that texture in the vertex shader. Thus we can have more than one animation, and each character can be in a different frame of its animation.

We encode the per-instance parameters into a constant buffer and index into that array using the SV_InstanceID.

To achieve mesh variation per instance, we break the character into submeshes that are individually instanced. Such meshes would be different heads, for example.

Finally, to avoid work animating characters in the far distance, we implement an LOD system with lower-resolution polygonal mesh subsections. The decision of which LOD to use is made per frame on a per-instance basis.

What follows is a simple rendering flow. For details on each step, see the corresponding subsections of this chapter.

CPU

- Perform game logic (animation time, AI, etc.).

- Determine an LOD group for each instance and populate LOD lists.

- For each LOD:

- For each submesh:

- Populate instance data buffers for each instanced draw call.

- For each buffer:

- - Call DrawInstanced() on the submesh.

- For each submesh:

GPU

Vertex Shader

- Load per-instance data from constants using SV_InstanceID.

- Load bone matrix of appropriate animation and frame.

- Perform palette skinning.

Pixel Shader

- Apply per-instance coloration as passed down from vertex shader.

- (Optional) Read a custom texture per instance from a texture array.

2.3.1 Constants-Based Instancing

The technique does not use the traditional method of encoding the per-instance data into a separate vertex stream. In testing, we found that reading per-instance data from a constant buffer using an index per instance was faster due to better cache utilization of the vertices. With the traditional method, vertex attributes increase by the number of per-instance data attributes. We chose instead to put the low-frequency data into constant memory.

SV_InstanceID

Under DirectX 10, there are a number of useful variables that can be generated automatically by the GPU and passed into shader code. These variables are called system variables. All system variables have a semantic that begins with "SV_". SV_InstanceID is a GPU-generated value available to all vertex shaders. By binding a shader input to this semantic, the variable will get an integral value corresponding to the current instance. The first index will get 0, and subsequent instances will monotonically increase this value. Thus every instance through the render pipeline gets a unique value, and every vertex for a particular instance shares a common SV_InstanceID value.

This automatic system value allows us to store an array of instance information in a constant buffer and use the ID to index into that array. Because we are injecting per-instance data into constant buffers, we are limited in the number of instances we can render per draw call by the size of the constant memory. In DirectX 10 there is a limit of 4,096 float4 vectors per constant buffer. The number of instances you can draw with this size depends on the size of the per-instance data structure. In this sample, we have the per-instance data shown in Listing 2-1.

As you can see, in this sample each instance takes up five float4 vectors of constant memory, which means we can store a maximum of 819 instances. So we split each group of instanced meshes into N buffers, where N = Total Instances/819. This is a very acceptable number, and it means that if we were to draw 10,000 meshes, it would take 13 draw calls. There is a difference in CPU overhead between 1 and 13 draw calls per frame, but the difference between 13 and 819 is much larger. Each draw call removed allows a reduction in CPU overhead and a possible increase of performance. Thus, there is often little effective difference in final frame rate between 1 and 13 draw calls.

Example 2-1. Instance Data Structure and Constant Buffer

struct PerInstanceData

{

float4 world1;

float4 world2;

float4 world3;

float4 color;

uint4 animationData;

};

cbuffer cInstanceData { PerInstanceData g_Instances[MAX_INSTANCE_CONSTANTS]; }On the CPU side, the data looks like Listing 2-2.

Example 2-2. C++ Instance Data Structure

struct InstanceDataElement

{

D3DXVECTOR4 world1;

D3DXVECTOR4 world2;

D3DXVECTOR4 world3;

D3DXCOLOR color;

// Offset in vectors (texels) into the whole data stream

// for the start of the animation playing

UINT animationIndex;

// Offset in vectors (texels) into the animation stream

//

for

the start of the frame playing UINT frameOffset;

UINT unused1;

// pad

UINT unused2;

// pad

};2.3.2 Palette Skinning Using an Animation Texture

In traditional matrix palette skinning, you encode the transform matrices into vertex shader constants. In our case, each character has a different pose, and possibly a different animation. We use a texture to store the animation data because the amount of data required for all animation frames is too large to fit into constant memory. All DirectX 10-class hardware should have sufficient vertex-texture fetch capability to make this a fast operation.

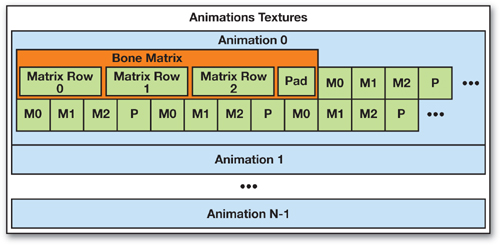

As shown in Figure 2-4, we save each bone matrix for each frame for each animation linearly into a texture, and thus we can read it out in the vertex shader.

Figure 2-4 The Animations Texture Breakdown

Decode Matrices from a Texture

Listing 2-3 shows the function in HLSL that loads the matrix from the animations texture based on the animation offset and bone offset in texels. You can see that we divide and modulus to determine a UV from a linear offset into the animation. Then we point-sample three rows of the matrix and construct a float4x4 (the translation is encoded in w components for each row). The sampling from the animation texture uses the HLSL Load() method, which returns the texel unfiltered, and takes an exact pixel position as input, thus simplifying the lookup process.

Conditional Branching for Weights

As with regular palette skinning, vertices can have up to four bone weights, but not all vertices have four bones active. Thus we make use of conditional branching in the vertex shader to avoid unnecessary texture loads of matrices we won't use. Listing 2-4 shows this technique.

Example 2-3. The loadBoneMatrix() HLSL Function

By restricting g_InstanceMatricesWidth to a power of two, the computational overhead of the addressing logic can be significantly reduced. The power of two restriction allows the shader to use bitwise operations in place of integer divide and modulus operations. With GPUs just as with CPUs, integer divides are more costly than other operations.

// Read a matrix (3 texture reads) from a texture containing

// animation data.

float4x4 loadBoneMatrix(uint3 animationData, float bone)

{

float4x4 rval = g_Identity;

// If this texture were 1D, what would be the offset?

uint baseIndex = animationData.x + animationData.y;

// We use 4 * bone because each bone is 4 texels to form a float4x4.

baseIndex += (4 * bone);

// Now turn that into 2D coords

uint baseU = baseIndex % g_InstanceMatricesWidth;

uint baseV = baseIndex / g_InstanceMatricesWidth;

// Note that we assume the width of the texture

// is an even multiple of the number of texels per bone;

// otherwise we'd have to recalculate the V component per lookup.

float4 mat1 = g_txAnimations.Load(uint3(baseU, baseV, 0));

float4 mat2 = g_txAnimations.Load(uint3(baseU + 1, baseV, 0));

float4 mat3 = g_txAnimations.Load(uint3(baseU + 2, baseV, 0));

// Only load 3 of the 4 values, and decode the matrix from them.

rval = decodeMatrix(float3x4(mat1, mat2, mat3));

return rval;

}Example 2-4. Conditional Branching in the Vertex Shader

VS_to_PS CharacterAnimatedInstancedVS(A_to_VS input)

{

VS_to_PS output;

uint4 animationData = g_Instances[input.InstanceId].animationData;

// Our per instance data is stored in constants

float4 worldMatrix1 = g_Instances[input.InstanceId].world1;

float4 worldMatrix2 = g_Instances[input.InstanceId].world2;

float4 worldMatrix3 = g_Instances[input.InstanceId].world3;

float4 instanceColor = g_Instances[input.InstanceId].color;

float4 finalMatrix;

// Load the first and most influential bone weight.

finalMatrix =

input.vWeights.x * loadBoneMatrix(animationData, input.vBones.x);

// Conditionally apply subsequent bone matrices if the weight is > 0.

if (input.vWeights.y > 0)

{

finalMatrix +=

input.vWeights.y * loadBoneMatrix(animationData, input.vBones.y);

if (input.vWeights.z > 0)

{

finalMatrix +=

input.vWeights.z * loadBoneMatrix(animationData, input.vBones.z);

if (input.vWeights.w > 0)

finalMatrix +=

input.vWeights.w * loadBoneMatrix(animationData, input.vBones.w);

}

}

}A Few Important Points

- The animation texture must be a multiple of four. This allows us to calculate the row only once in the vertex shader and then simply offset along U to get the four lines for each matrix.

- We actually encode the matrix into three lines to save a texture fetch, but to avoid issues with non-power-of-two textures, we add in a pad texel.

- The linear offset in texels to the active animation, and the linear offset within the animation for the active frame, are specified per instance.

- The bone index and bone weight information are stored (as normal) per vertex for matrix palette skinning.

2.3.3 Geometry Variations

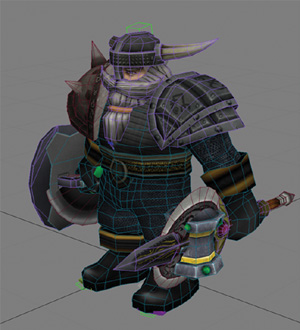

If all characters rendered had the exact same mesh geometry, the user would immediately notice the homogeneousness of the scene and her disbelief would not be suspended. To achieve more variation in character meshes, we break a character into multiple pieces and provide alternate meshes. In the case of this sample, we have warriors with differing armor pieces and weapons. The character mesh is broken up into these distinct pieces, and each piece is instanced separately.

The basic method involves understanding which pieces each character instance contains. Then, we create a list of characters that use a given piece. At draw time, we simply iterate over the pieces, inject proper position information into the per-instance constant buffer, and draw the appropriate amount of instances.

Figure 2-5 is from Autodesk's 3ds Max 9, where we can see that the source mesh contains all the mesh permutations for each character. Notice that all the weapons are included in the same file. They are exported as separate mesh objects but all are bound to an identical skeleton. Thus we can reuse the animations from the character for all the subsections. At load time, the system will generate random permutations of the sub sections to give each character a different appearance. The technique supports as many mesh variations as your artists can come up with. For a real game, you might want to have finer artist control over this (as opposed to random generation), which would require some work on tools or on the export path.

Figure 2-5 The Character Mesh in 3ds Max 9 Showing All Mesh Variations

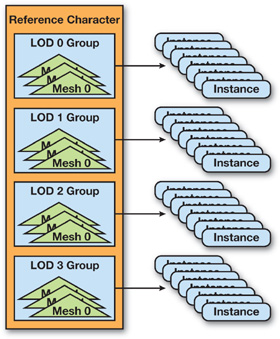

2.3.4 The Level-of-Detail System

Because characters in the distance take up fewer pixels on the screen, there is no need for them to be as highly tessellated as characters closer to the camera. In addition, distant characters do not need to sample from the normal map, or calculate complex lighting. Thus we implement an LOD system to improve performance. The technique for instancing breaks each character into a collection of mesh pieces that are instanced. We can easily create an LOD system by simply adding more pieces to the instancing system. Every frame, each character instance determines the LOD group that it is in by its distance from the camera. This operation happens on the CPU. Then at render time, collections of each mesh piece in each LOD group are drawn. As we iterate through each LOD mesh piece, we consult which instances are in that LOD group and use that piece. Thus we can update the instance data buffers appropriately to render each character at the correct LOD level. See Figure 2-6. We also perform a simple view frustum culling on the CPU to avoid sending thousands of characters behind the camera to the GPU.

Figure 2-6 The Layout of the LOD Data

An additional benefit of an LOD system is to reduce geometric aliasing, which would result if you were to draw a highly tessellated object in the far distance such that vertices overlapped on a pixel.

2.4 Other Considerations

2.4.1 Color Variations

Each instance has a different "base" color, which is selectively applied to the mesh based on the alpha channel of the color map. The artists have painted an alpha channel that represents the amount of custom color to blend in. In the pixel shader, we lerp between the custom instance color and white based on this blend factor. This allows greater variation, and it is possible to define multiple instance colors to be used across the different mesh pieces.

2.4.2 Performance

As with any type of instancing, performance gains are seen on the CPU side. By using instancing, you free up CPU processing time for other operations, such as AI or physics. Thus, the importance of this technique depends on the CPU load of your game.

In general, any instancing technique will shift the load from the CPU to the GPU, which is a good thing, because the CPU can always do more processing of your game data.

Performance also depends on where you set the LOD levels. If all the characters are rendered at the highest LOD, then you can render many fewer characters. But as you bring the lines for far LODs closer to the camera, you may see artifacts. This is a judgment call for the graphics programmer.

Our sample in the instanced case runs at about 34 frames/sec for 9,547 characters on an Intel Core 2 Duo 2.93 GHz, 2GB RAM system with an NVIDIA 8800 GTX at 1280x1024 resolution. This is with the LOD level lines set to 20, 60, and 100 character radiuses, respectively. This setting results in 160 instanced draw calls, compared to 59,726 single draw calls if you were to draw the same character meshes in a more traditional way (albeit inefficiently).

Performance will also depend on the mesh data and the level of variety. You can increase performance with more aggressive use of LOD, and you can improve quality with less aggressive use of LOD. This sample is actually pixel/fill limited with so many characters; however, the balance is close and the GPU is fully loaded. As the resolution lowers, the bottleneck shifts to vertex processing due to the vast numbers of GPU skinned characters.

2.4.3 Integration

Integrating this technique is similar to the integration of a new rendering type. Most likely you would define a crowd class, or a background group of animated characters. The placement of the characters in the group can be specified by artists and used to populate the instance data buffer. Placement could also be defined by more-complex AI.

The mesh data and animation data are exactly the same as for a normal hardware palette skinning implementation. The only difference is that you need to preprocess the animation curves into a collection of bone matrices per frame. This should most likely be a preprocessing step.

2.5 Conclusion

Skinned instancing is an appropriate technique for rendering massive numbers of independently animating characters in real time. It makes use of many key features of the DirectX 10 API. It uses vertex texture fetch to read animation data that is stored in a single texture. It also uses SV_InstanceID to index into a constant buffer containing instance information (position, animation time). Finally, the system supports an easy-to-implement LOD system to allow polygonal/lighting detail to be higher when appropriate and lower in the distance.

2.6 References

Carucci, Francesco. 2005. "Inside Geometry Instancing." In GPU Gems 2, edited by Matt Pharr, pp 47–67. Addison-Wesley.

Dudash, Bryan. 2005 "Technical Report: Instancing." In NVIDIA SDK 9.5. Available online at http://developer.nvidia.com.

Dudash, Bryan. 2007. "Skinned Instancing." In NVIDIA SDK 10. Available online at http://developer.nvidia.com.

Microsoft. 2007. "DirectX Documentation for C++." In Microsoft DirectX SDK (February 2007). Available online at http://msdn.microsoft.com/directx.

- Contributors

- Foreword

- Part I: Geometry

-

- Chapter 1. Generating Complex Procedural Terrains Using the GPU

- Chapter 2. Animated Crowd Rendering

- Chapter 3. DirectX 10 Blend Shapes: Breaking the Limits

- Chapter 4. Next-Generation SpeedTree Rendering

- Chapter 5. Generic Adaptive Mesh Refinement

- Chapter 6. GPU-Generated Procedural Wind Animations for Trees

- Chapter 7. Point-Based Visualization of Metaballs on a GPU

- Part II: Light and Shadows

-

- Chapter 8. Summed-Area Variance Shadow Maps

- Chapter 9. Interactive Cinematic Relighting with Global Illumination

- Chapter 10. Parallel-Split Shadow Maps on Programmable GPUs

- Chapter 11. Efficient and Robust Shadow Volumes Using Hierarchical Occlusion Culling and Geometry Shaders

- Chapter 12. High-Quality Ambient Occlusion

- Chapter 13. Volumetric Light Scattering as a Post-Process

- Part III: Rendering

-

- Chapter 14. Advanced Techniques for Realistic Real-Time Skin Rendering

- Chapter 15. Playable Universal Capture

- Chapter 16. Vegetation Procedural Animation and Shading in Crysis

- Chapter 17. Robust Multiple Specular Reflections and Refractions

- Chapter 18. Relaxed Cone Stepping for Relief Mapping

- Chapter 19. Deferred Shading in Tabula Rasa

- Chapter 20. GPU-Based Importance Sampling

- Part IV: Image Effects

-

- Chapter 21. True Impostors

- Chapter 22. Baking Normal Maps on the GPU

- Chapter 23. High-Speed, Off-Screen Particles

- Chapter 24. The Importance of Being Linear

- Chapter 25. Rendering Vector Art on the GPU

- Chapter 26. Object Detection by Color: Using the GPU for Real-Time Video Image Processing

- Chapter 27. Motion Blur as a Post-Processing Effect

- Chapter 28. Practical Post-Process Depth of Field

- Part V: Physics Simulation

-

- Chapter 29. Real-Time Rigid Body Simulation on GPUs

- Chapter 30. Real-Time Simulation and Rendering of 3D Fluids

- Chapter 31. Fast N-Body Simulation with CUDA

- Chapter 32. Broad-Phase Collision Detection with CUDA

- Chapter 33. LCP Algorithms for Collision Detection Using CUDA

- Chapter 34. Signed Distance Fields Using Single-Pass GPU Scan Conversion of Tetrahedra

- Chapter 35. Fast Virus Signature Matching on the GPU

- Part VI: GPU Computing

-

- Chapter 36. AES Encryption and Decryption on the GPU

- Chapter 37. Efficient Random Number Generation and Application Using CUDA

- Chapter 38. Imaging Earth's Subsurface Using CUDA

- Chapter 39. Parallel Prefix Sum (Scan) with CUDA

- Chapter 40. Incremental Computation of the Gaussian

- Chapter 41. Using the Geometry Shader for Compact and Variable-Length GPU Feedback

- Preface