GPU Gems 3

GPU Gems 3 is now available for free online!

The CD content, including demos and content, is available on the web and for download.

You can also subscribe to our Developer News Feed to get notifications of new material on the site.

Chapter 35. Fast Virus Signature Matching on the GPU

Elizabeth Seamans

Juniper Networks

Thomas Alexander

Polytime

35.1 Introduction

The Internet, with its constantly improving data communications infrastructure, moves vast amounts of data every second. To process data at a low latency and high throughput, networking equipment vendors use dedicated hardware. At the 4 Gb/s to 40 Gb/s level, processing is done using expensive, purpose-built application-specific integrated circuits. From the 100 Mb/s to 4 Gb/s level, processing is usually performed using a combination of general-purpose CPUs, network processors, and field-programmable gate arrays (FPGAs). Processing throughput below 100 Mb/s is performed using either an x86 CPU or an embedded processor.

Specialized network processors and FPGAs are flexible, and new algorithms and services can be deployed on edge routers or security appliances built using either technology. However, the low unit volumes force manufacturers to price these devices at many times the cost of producing them, to recoup the R&D dollars.

In this chapter we explore the potential of using the parallelism of commodity-priced graphics processors as network coprocessors. Although GPUs are designed principally for real-time 3D graphics, network processing and graphics processing have a lot in common.

Network processing and graphics processing share these important characteristics:

- They involve highly data-parallel algorithms, with requirements for a small but very high speed memory subsystem.

- They offer the ability to use large numbers of multithreaded processors to hide DRAM latency.

- They offer a fast interconnection bus to stream large volumes of data.

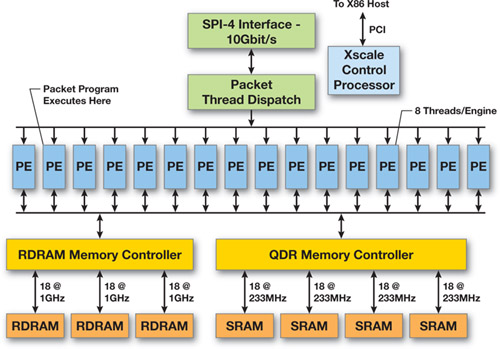

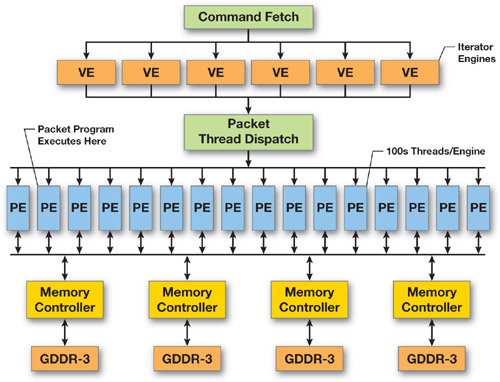

To illustrate this comparison, Figures 35-1 and 35-2 show the main attributes of a high-end network processor from Intel (IXP2800) and a high-end GPU from NVIDIA.

Figure 35-1 An Intel IXP2800 Network Processor

Figure 35-2 A High-End NVIDIA GPU

The motivation for this work is to see if the GPU has sufficient functionality to act as a network processor and, if it does, to leverage its commodity pricing to build routing and security appliances with a disruptive price/performance profile. Network security is probably the most demanding of data packet processing operations. Every byte, and potentially a combination of neighboring bytes, has to be processed multiple times. The processing involves bit manipulations and many lookups into several multi-megabyte databases. For example, virus detection requires pattern matching at multi-gigabit rates to achieve acceptable throughput.

To address this performance requirement, we present a prototype virus scanning system partially implemented on a modern graphics processor. The results we obtained indicate that GPUs now have the necessary computing, memory, and interface functionality to be compelling network offload engines.

35.2 Pattern Matching

Network security processing for virus detection relies extensively on pattern matching to determine if the data in packets is harmful and how to process the information. Pattern matching algorithms analyze pieces of the network data stream and compare data patterns (signatures) against a database of known viruses. These signature patterns can be fairly complex, composed of different-size strings, wild characters, range constraints, and sometimes recursive forms.

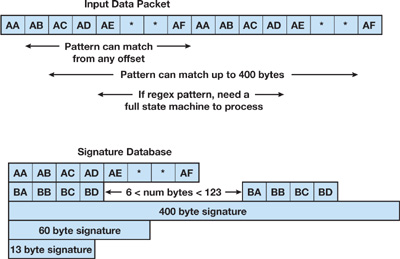

Typically, the processing and memory bottleneck in network processing engines is the performance of pattern matching computations at gigabit rates over many tens of thousands of signatures. The different signature lengths, wild characters, and range constraints require each input byte to be read and processed many times. In addition, many bytes of state are kept in flight while the matching operation is being done. Figure 35-3 gives an example of comparing the input data against a virus signature.

Figure 35-3 Matching a Virus Signature Against Packet Data

To achieve sufficient processing throughput for high-performance networks, data streams must be analyzed in parallel using intrapacket scanning (bytes within a packet are processed in parallel) or by using the interpacket approach (multiple packets are processed simultaneously). These methods provide different benefits and limitations, and we experiment with both types of parallelism for our GPU-based virus detection system.

35.2.1 A Data-Scanning Library

We developed a library to support applications with data objects, such as packets or files, of varying sizes and requirements. The library accepts a data buffer and a data object description from the application. It inspects the data buffer and compares it against a static database of fixed-character, or exact-match, signature strings as a first step toward supporting full regular-expression signatures. Our library returns information to the application about any matches it detected.

We used the open-source antivirus application ClamAV, along with its virus signature database, as a demonstration application. ClamAV detects virus signatures in files of various types and sizes. Its signature database holds around 30,000 exact-match virus signatures whose sizes range between a few bytes to several hundred bytes. In the original algorithm, ClamAV processes one file at a time before it begins the next file, using the following steps:

- Determine the file type.

- Perform any necessary preprocessing (such as decompression).

- Read the file data into a data buffer until the buffer is full or the end of the file is reached.

- Find the signature matches for fixed-character signature strings.

- Repeat steps 3 and 4 until the end of the file is reached.

- Report the result for the file.

These steps place specific requirements on any virus detection library, including the stipulation that the library use GPU acceleration. In addition the library must be able to handle files that are much smaller than the data buffer, or file memory footprints that span multiple data buffers. Also, it must properly identify signature matches for different file types.

35.3 The GPU Implementation

As a data-parallel processor, the GPU excels at algorithmic tasks with regular, fixed-size input and output data sets. Therefore, to best utilize the GPU for virus detection, we need to focus on highly parallel portions of the pipeline and use the CPU for morevariable and serial execution tasks. In this way, we employ the GPU as a high-speed filter before completing the signature-matching work on the CPU. The GPU uses a small number of bytes to determine whether there is a likely match against a signature in the database, and the CPU verifies any possible matches.

The GPU maintains a version of the signature database, which it uses to detect possible signature matches in the input data. We create the GPU database by identifying 2 consecutive bytes from each signature and using them as an index into a 64,000-entry array, where each index is mapped to, at most, one signature. The array stores the 4 bytes in the signature immediately preceding the 2-byte key. A thread on the GPU reads a single 2-byte value from the input data and uses it as an index into the database array. It fetches the 4-byte tag and compares the tag against the 4 bytes of data immediately preceding the 2-byte value in the input data. In this way, every consecutive 2-byte value from the input data is read and compared to the signature database.

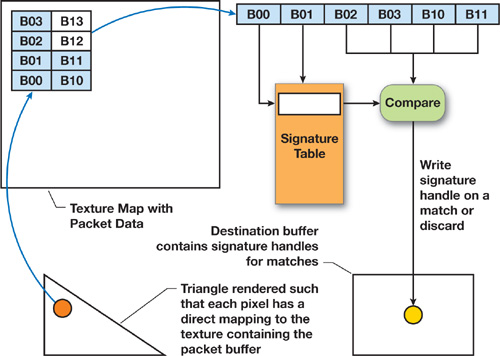

Figure 35-4 shows how a GPU thread performs the packet filtering operation. Listing 35-1 gives the Cg code for reading 2-byte values from the input buffer and generating 4-byte tags for comparison against the database array. Listing 35-2 gives the Cg code for signature pattern matching on the GPU where, if there is a match, we write the corresponding bytes to the output buffer.

Figure 35-4 Filtering Packet Data on the GPU

Example 35-1. Cg Code for Reading Bytes from the Packet Buffer and Generating Tags

void get_words(in float4 in_pos,

out float4 w0,

out float4 w1,

out float4 tag0,

out float4 tag1,

const uniform samplerRECT pkt_buffer)

{

// Read the data from the packet buffer.

float4 p = texRECT(pkt_buffer, pos.xy);

float4 p_p, n_p;

float2 pindex = float2(pos.x-1, pos.y);

float2 nindex = float2(pos.x+1, pos.y);

// Check boundary case and perform address wrapping if needed.

// PKT_MAP_X_SZ is the packet buffer size.

if (pos.x == 0)

pindex = float2(PKT_MAP_X_SZ-1, pos.y-1);

if (pos.x == PKT_MAP_X_SZ-1)

nindex = float2(0, pos.y+1);

// Read the second word from the packet buffer.

float4 p_p = texRECT(pkt_buf, pindex);

float4 n_p = texRECT(pkt_buf, nindex);

// Pack data and check for 2-word alignment.

if (floor(fmod(in_pos.x, 2.0))) {

w0 = float4(p.w, p.x, 0, 0);

tag0 = float4(p.z, p_p.w, p_p.x, p.y);

w1 = float4(n_p. z, p.w, 0, 0);

tag1 = float4(p.y, p.z, p_p.w, p.x);

} else {

w0 = float4(p.y, p.z, 0, 0);

tag0 = float4(p_p.x, p_p.y, p_p.z, p_p.w);

w1 = float4(p.x, p.y, 0, 0);

tag1 = float4(p_p.w, p_p.x, p_p.y, p.z);

}

}Example 35-2. Cg Code for Virus Signature Matching on the GPU

void signature_match(in float4 fragid : WPOS,

out float4 output_value : COLOR0,

const uniform samplerRECT pkt_buffer,

const uniform samplerRECT g_table)

{

float4 word0, word1, tag0, tag1;

// Read 2 words from the packet buffer and create tag.

get_words(fragid, word0, word1, tag0, tag1, pkt_buffer);

// Use the word values to index the virus pattern table.

float4 h0 = texRECT(g_table, word0.xy * 256);

float4 h1 = texRECT(g_table, word1.xy * 256);

// Check if all 4 of the bytes match.

float all_match0 = all(h0 == tag0);

float all_match1 = all(h1 == tag1);

output_value = float4(0, 0, 0, 0);

// Discard this output if there are no matches.

if (!(all_match0 || all_match1))

discard;

output_value.xy = all_match0 * word0.xy;

output_value.zw = all_match1 * word1.xy;

}We draw a quad primitive to execute the GPU threads with proper input addresses for reading consecutive 2-byte pairs from the input buffer. For example, if Thread A reads bytes [i, j], then Thread B reads bytes [j, k], and so on until the entire buffer is processed. If a thread detects a match, it writes a 2-byte signature identifier into the write buffer at the relative offset of the input segment. Because the identifier is 2 bytes long, the write buffer is twice the size of the input buffer and a result for input location i is written at offset 2i. This GPU workload gives each thread a short, regular execution path to take advantage of the single-instruction, multiple-data characteristics of the GPU.

The GPU threads are oblivious to the data objects in the data buffer. This allows the threads to operate independently and avoid any decision making regarding object boundaries in the data buffer. The CPU simply transfers the input data to the GPU and receives back an integer value indicating the number of possible signature matches in the buffer. The CPU is then responsible for completing the signature match verification for each result and mapping it to a particular data object.

We changed the ClamAV application to fill the data buffer completely before beginning the scan. In our version, ClamAV performs steps 1 to 3 from Section 35.2.1 until the data buffer is full and passes the full buffer and the relevant object descriptions to our library. The application fills the input data buffer before submitting it to the library, so the buffer can hold multiple files, a single complete file, a portion of a file, or some combination of these. ClamAV gives our library enough information to enable it to track multipart files, and it replicates enough data (as many bytes as the longest signature) between files to ensure that signature data spanning two buffers will be matched accurately.

We divided the work between the CPU and the GPU, so our library needs to transfer each data buffer it receives to the GPU and wait for processing to complete before using the results. Because the application and library execute in the context of the same software thread, the CPU might stand idle while the data is transferred and processed by the GPU. To keep the CPU busy, we pipelined the work by rotating among multiple data buffers. This way, almost all executions on the CPU can be performed in parallel with the GPU processing, and vice versa. The one section that can't be processed in parallel—transferring the result buffer back from the GPU if there are matches—is serialized only as an artifact of our implementation.

35.4 Results

We compared the execution of the open-source ClamAV antivirus application executing its own exact-match algorithm on the CPU against the same application calling out to our scanning library.

We point out that the original ClamAV application performs two additional steps: it detects matches against its approximately 2,000 regular-expression signatures, and it computes the MD5 hash for every file and compares it to a database of approximately 8,000 hash values. These functions are beyond the scope of the algorithm we describe here, and we removed them from our ClamAV application before comparing the performance.

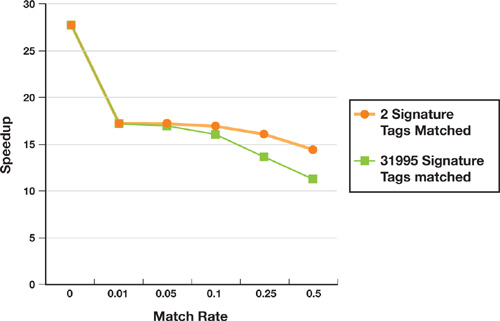

The graph in Figure 35-5 shows the speedup obtained with our GPU-based approach for a range of signature tag matches in an 8 MB data file. The hardware used for these comparisons is a 3 GHz Intel Pentium 4 with an NVIDIA GeForce 7800 GTX. If no signature tags match (the first data point on the curves), the GPU implementation outperforms the CPU by 27x. When the GPU finds matching tags in the input data, the performance drops sharply because of the overhead incurred when the result buffer is copied back to the CPU.

Figure 35-5 ClamAV (GPU) Speedup over a Range of Signature Tag Match Rates

The match rates represent the percentage of 64-byte segments in the input data that contain a valid signature tag. The speedup drops from 17x to 11x as the match rate increases because the CPU must do additional verification work. The two curves compare the effects of the number of individual signatures matched. As the number of matches goes up and the number of individual signatures identified grows, performance degrades noticeably because thousands of randomly ordered individual signature strings are fetched into the CPU caches for comparison.

In a practical setting, most input data for real systems contain few, if any, signature matches, so the speedup from the GPU is significant. However, it would be useful to mitigate the performance effects of signature tag matches not only for the infrequent case where full virus signatures are matched, but also for cases where the GPU reports false positives—that is, the data contains the signature tag but not the full signature.

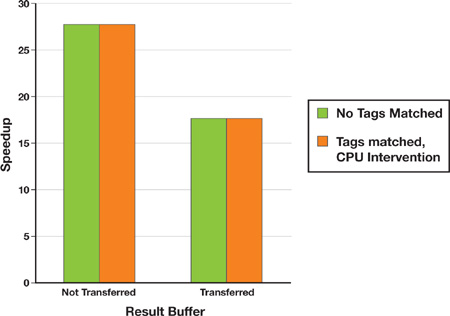

When the CPU must verify the GPU results, it incurs three kinds of overhead: the time to copy the GPU result buffer back to the CPU, the processing time to walk through the result buffer, and the time to verify each positive result. Although the results reported in Figure 35-5 conflate the effects of all three overheads, the bar chart in Figure 35-6 helps to set them apart. The "No tags matched" bars compare the original zero-match case with, and without, the result buffers being copied to the CPU. For low match rates, almost all the additional overhead is incurred by the buffer transfers.

Figure 35-6 Managing Performance for 50 Percent Tag Match Rate and 31,995 Signature Tags Matched

The "Tags matched, CPU intervention" bars compare two techniques for reducing CPU processing time for high match rates, demonstrated on the worst case (50 percent match rate and 31,995 signature tags) from Figure 35-5. In the first approach, the CPU compares the total number of results written to the buffer against a threshold value. If the results exceed the threshold, the CPU opts to declare the entire block of input data as bad (or suspect) without verifying the matches, thereby avoiding the result copy and the processing. This option is useful for input blocks of one object or a group of related objects (such as packets in a stream). In the second approach, the CPU copies the result buffer and processes it while tallying the results returned for each object in the buffer. Once an object exceeds a threshold, the CPU marks the object and skips over its remaining results; at the start of the next object, the CPU begins processing again.

For the outcome we present here, each 512-byte segment of input data is designated as an individual object. Although the result copy still reduces the gain from 27x to 17x, the reduced number of result buffer reads of signature verifications no longer degrades the performance. This conclusion is consistent with our observations that although the CPU and GPU execute in parallel, the workload on the GPU is much greater than that on the CPU in the zero-match case, leaving the CPU frequently idle.

Our implementation does not pipeline the write-buffer transfer, and neither the CPU nor the GPU can perform work during that time. A multithreaded system could avoid some of that overhead by allowing the CPU to continue executing while the transfer is taking place. The CPU processing overhead, which can degrade performance for high match rates, can be diminished by halting work on a particular data object or group of related objects as long as it is acceptable to report some number of false positives.

35.5 Conclusions and Future Work

Our implementation of a fast data-scanning library suggests the GPU can be effectively employed for accelerating virus signature detection over real networks. Although we have successfully exploited the power of the GPU to act as a pattern filter, our simple algorithm is not very scalable in three key areas. For example, it supports, at most, 64,000 signatures (the size of the GPU signature array), which is problematic for larger virus databases. In addition, it requires each signature to have a unique 6-byte sequence of characters (including a unique 2-byte array index), and this requirement obviously limits the space of viruses we can detect. Finally, it supports only fixed-character signatures.

If we change the GPU signature data structure to hold more signatures, it will occupy a larger footprint and possibly require more memory reads to navigate the structure. Both these changes can potentially degrade memory performance. Alternatively, we could make the GPU filter coarser by mapping individual GPU array entries to multiple signatures. In this scheme, the CPU would disambiguate between signatures; however, this approach returns some of the signature-matching work to the CPU, which could degrade the CPU cache performance and throughput and, in some cases, slow the performance of the full system.

Our algorithm would easily extend to support the simple regular-expression signatures found in the ClamAV database, but most of the effort would occur on the CPU. ClamAV regular-expression signatures have fixed-character segments separated by a variable number of wildcard characters, but they do not include recursion. We can use the GPU to identify probable matches of the fixed-character segments as it does now. Then we could use the CPU to execute the remaining work by verifying that each segment in the signature has a probable match and that each segment match is a true positive. After verifying these matches, the CPU would run a coarse-grained state machine to determine whether the signature is completely matched. This way, the GPU is not responsible for speculatively reading a large number of input data bytes or for keeping state across multiple data buffers.

35.6 References

The Clam AV software we used is available at http://www.clamav.org.

- Contributors

- Foreword

- Part I: Geometry

-

- Chapter 1. Generating Complex Procedural Terrains Using the GPU

- Chapter 2. Animated Crowd Rendering

- Chapter 3. DirectX 10 Blend Shapes: Breaking the Limits

- Chapter 4. Next-Generation SpeedTree Rendering

- Chapter 5. Generic Adaptive Mesh Refinement

- Chapter 6. GPU-Generated Procedural Wind Animations for Trees

- Chapter 7. Point-Based Visualization of Metaballs on a GPU

- Part II: Light and Shadows

-

- Chapter 8. Summed-Area Variance Shadow Maps

- Chapter 9. Interactive Cinematic Relighting with Global Illumination

- Chapter 10. Parallel-Split Shadow Maps on Programmable GPUs

- Chapter 11. Efficient and Robust Shadow Volumes Using Hierarchical Occlusion Culling and Geometry Shaders

- Chapter 12. High-Quality Ambient Occlusion

- Chapter 13. Volumetric Light Scattering as a Post-Process

- Part III: Rendering

-

- Chapter 14. Advanced Techniques for Realistic Real-Time Skin Rendering

- Chapter 15. Playable Universal Capture

- Chapter 16. Vegetation Procedural Animation and Shading in Crysis

- Chapter 17. Robust Multiple Specular Reflections and Refractions

- Chapter 18. Relaxed Cone Stepping for Relief Mapping

- Chapter 19. Deferred Shading in Tabula Rasa

- Chapter 20. GPU-Based Importance Sampling

- Part IV: Image Effects

-

- Chapter 21. True Impostors

- Chapter 22. Baking Normal Maps on the GPU

- Chapter 23. High-Speed, Off-Screen Particles

- Chapter 24. The Importance of Being Linear

- Chapter 25. Rendering Vector Art on the GPU

- Chapter 26. Object Detection by Color: Using the GPU for Real-Time Video Image Processing

- Chapter 27. Motion Blur as a Post-Processing Effect

- Chapter 28. Practical Post-Process Depth of Field

- Part V: Physics Simulation

-

- Chapter 29. Real-Time Rigid Body Simulation on GPUs

- Chapter 30. Real-Time Simulation and Rendering of 3D Fluids

- Chapter 31. Fast N-Body Simulation with CUDA

- Chapter 32. Broad-Phase Collision Detection with CUDA

- Chapter 33. LCP Algorithms for Collision Detection Using CUDA

- Chapter 34. Signed Distance Fields Using Single-Pass GPU Scan Conversion of Tetrahedra

- Chapter 35. Fast Virus Signature Matching on the GPU

- Part VI: GPU Computing

-

- Chapter 36. AES Encryption and Decryption on the GPU

- Chapter 37. Efficient Random Number Generation and Application Using CUDA

- Chapter 38. Imaging Earth's Subsurface Using CUDA

- Chapter 39. Parallel Prefix Sum (Scan) with CUDA

- Chapter 40. Incremental Computation of the Gaussian

- Chapter 41. Using the Geometry Shader for Compact and Variable-Length GPU Feedback

- Preface