GPU Gems 3


Chapter 19. Deferred Shading in Tabula Rasa

Rusty Koonce

NCsoft Corporation

This chapter is meant to be a natural extension of "Deferred Shading in S.T.A.L.K.E.R." by Oles Shishkovtsov in GPU Gems 2 (Shishkovtsov 2005). It is based on two years of work on the rendering engine for the game Tabula Rasa, a massively multiplayer online video game (MMO) designed by Richard Garriott. While Shishkovtsov 2005 covers the fundamentals of implementing deferred shading, this chapter emphasizes higher-level issues, techniques, and solutions encountered while working with a deferred shading-based engine.

19.1 Introduction

In computer graphics, the term shading refers to the process of rendering a lit object. This process includes the following steps:

- Computing geometry shape (that is, the triangle mesh)

- Determining surface material characteristics, such as the normal, the bidirectional reflectance distribution function, and so on

- Calculating incident light

- Computing surface/light interaction, yielding the final visual

Typical rendering engines perform all four of these steps at once when rendering an object in the scene. Deferred shading separates the first two steps from the last two, performing each pair in a separate, discrete stage of the render pipeline.

In this chapter, we assume the reader has a basic understanding of deferred shading. For an introduction to deferred shading, refer to Shishkovtsov 2005, Policarpo and Fonseca 2005, Hargreaves and Harris 2004, or another such resource.

In this chapter, the term forward shading refers to the traditional shading method in which all four steps in the shading process are performed together. The term effect refers to a Direct3D D3DX effect. The terms technique, annotation, and pass are used in the context of a D3DX effect.

The term material shader refers to an effect used for rendering geometry (that is, in the first two steps of the shading process) and light shader refers to an effect used for rendering visible light (part of the last two steps in the shading process). A body is a geometric object in the scene being rendered.

We have avoided GPU-specific optimizations or implementations in this chapter; all solutions are generic, targeting either Shader Model 2.0 or Shader Model 3.0 hardware. In this way, we hope to emphasize the technique and not the implementation.

19.10 Conclusion

Deferred shading has progressed from theoretical to practical. New techniques are often too expensive, too abstract, or simply too impractical to be used outside of a tightly scoped demo. Deferred shading, by contrast, has proven to be a versatile, powerful, and manageable technique that can work in a real game environment.

The main drawbacks of deferred shading include the following:

- High memory bandwidth usage

- No hardware antialiasing

- Lack of proper alpha-blending support

We have found that current midrange hardware is able to handle the memory bandwidth requirements at modest resolutions, and current high-end hardware is able to handle higher resolutions with all features enabled. With DirectX 10 hardware, both ATI and NVIDIA have significantly improved MRT performance. DirectX 10 and Shader Model 4.0 also provide integer operations in pixel shaders as well as read access to the depth buffer, both of which can be used to reduce memory bandwidth usage. Performance should continue to improve as new hardware and new features become available.

Reliable edge detection combined with proper filtering can significantly reduce aliasing artifacts around geometry edges. Although these techniques are not as accurate as the subsampling performed by hardware full-scene antialiasing, they still produce results that trick the eye into perceiving hard edges as smooth.

The primary outstanding issue with deferred shading is the lack of alpha-blending support. We consciously sacrificed some visual quality by not supporting transparency within the deferred pipeline. However, we felt that, overall, the gains from using deferred shading outweighed the issues.

The primary benefits of deferred shading include the following:

- Lighting cost is independent of scene complexity.

- Shaders have access to depth and other pixel information.

- Each pixel is lit only once per light. That is, no lighting is computed on pixels that later become occluded by other opaque geometry.

- Clean separation of shader code: material rendering is separated from lighting computations.

Every day, new techniques and new hardware come out, and with them, the desirability of deferred shading may go up or down. The future is hard to predict, but we are happy with our choice to use deferred shading in the context of today's hardware.

19.11 References

Hargreaves, Shawn, and Mark Harris. 2004. "6800 Leagues Under the Sea: Deferred Shading." Available online at http://developer.nvidia.com/object/6800_leagues_deferred_shading.html.

Kozlov, Simon. 2004. "Perspective Shadow Maps: Care and Feeding." In GPU Gems, edited by Randima Fernando, pp. 217–244. Addison-Wesley.

Martin, Tobias, and Tiow-Seng Tan. 2004. "Anti-aliasing and Continuity with Trapezoidal Shadow Maps." In Eurographics Symposium on Rendering Proceedings 2004, pp. 153–160.

Policarpo, Fabio, and Francisco Fonseca. 2005. "Deferred Shading Tutorial." Available online at http://fabio.policarpo.nom.br/docs/Deferred_Shading_Tutorial_SBGAMES2005.pdf.

Shishkovtsov, Oles. 2005. "Deferred Shading in S.T.A.L.K.E.R." In GPU Gems 2, edited by Matt Pharr, pp. 143–166. Addison-Wesley.

Sousa, Tiago. 2005. "Generic Refraction Simulation." In GPU Gems 2, edited by Matt Pharr, pp. 295–306. Addison-Wesley.

Stamminger, Marc, and George Drettakis. 2002. "Perspective Shadow Maps." In ACM Transactions on Graphics (Proceedings of SIGGRAPH 2002) 21(3), pp. 557–562.

I would like to thank all of the contributors to this chapter, including a few at NCsoft Austin who also have helped make our rendering engine possible: Sean Barton, Tom Gambill, Aaron Otstott, John Styes, and Quoc Tran.

19.2 Some Background

In Tabula Rasa, our original rendering engine was a traditional forward shading engine built on top of DirectX 9, using shaders built on HLSL D3DX effects. Our effects used pass annotations within the techniques that described the lighting supported by that particular pass. The engine on the CPU side would determine what lights affected each body. This information, along with the lighting data in the effect pass annotations, was used to set light parameters and invoke each pass the appropriate number of times.

This forward shading approach has several issues:

- Computing which lights affect each body consumes CPU time; in the worst case, with n bodies and m lights, it becomes an O(n × m) operation.

- Shaders often require more than one render pass to perform lighting, with complicated shaders requiring worst-case O(n) render passes for n lights.

- Adding new lighting models or light types requires changing all effect source files.

- Shaders quickly encounter the instruction count limit of Shader Model 2.0.
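To make the O(n × m) cost concrete, here is a minimal C++ sketch of the CPU-side bookkeeping a forward renderer of this kind performs each frame. Every body is tested against every light, and in the worst case each affecting light costs one extra lighting pass. The `Sphere` bounds and the one-pass-per-light rule are simplifying assumptions for illustration, not Tabula Rasa's actual code. Real forward renderers often pack several lights into one pass.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical bounding volume used for both bodies and point lights.
struct Sphere {
    float x, y, z, radius;
};

// True if two spheres overlap (compares squared distances to avoid sqrt).
bool Overlaps(const Sphere& a, const Sphere& b) {
    const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    const float r = a.radius + b.radius;
    return dx * dx + dy * dy + dz * dz <= r * r;
}

// Worst-case forward-shading bookkeeping: n bodies x m lights overlap
// tests, and one lighting pass per overlapping (body, light) pair.
std::size_t CountForwardLightPasses(const std::vector<Sphere>& bodies,
                                    const std::vector<Sphere>& lights,
                                    std::size_t* overlapTests) {
    assert(overlapTests != nullptr);
    std::size_t passes = 0;
    *overlapTests = 0;
    for (const Sphere& body : bodies) {
        for (const Sphere& light : lights) {
            ++*overlapTests;
            if (Overlaps(body, light)) ++passes;
        }
    }
    return passes;
}
```

The test count grows with the product of bodies and lights no matter how many lights actually touch each body. This is the overhead that deferred shading removes.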

Working on an MMO, we do not have tight control over the game environment. We can't control how many players are visible at once or how many visual effects or lights may be active at once. Given our lack of control over the environment and the poor scalability of lighting costs within a forward renderer, we chose to pursue a deferred shading renderer. We felt this could give us visuals that match any top game engine while making our lighting costs independent of scene geometric complexity.

The deferred shading approach offers the following benefits:

- Lighting costs are independent of scene complexity; there is no overhead of determining what lights affect what body.

- There are no additional render passes on geometry for lighting, resulting in fewer draw calls and fewer state changes required to render the scene.

- New light types or lighting models can be added without requiring any modification to material shaders.

- Material shaders do not perform lighting, freeing up instructions for additional geometry processing.

Deferred shading requires multiple render target (MRT) support and consumes more memory bandwidth, making its hardware requirements higher than what we wanted our minimum specification to be. Because of this, we chose to support both forward and deferred shading: we leveraged our existing forward shading renderer and built our deferred rendering pipeline on top of it.

With a full forward shading render pipeline as a fallback, we were able to raise our hardware requirements for our deferred shading pipeline. We settled on requiring Shader Model 2.0 hardware for our minimum specification and forward rendering pipeline, but we chose to require Shader Model 3.0 hardware for our deferred shading pipeline. This made development of the deferred pipeline much easier, because we were no longer limited in instruction counts and could rely on dynamic branching support.

19.3 Forward Shading Support

Even with a deferred shading-based engine, forward shading is still required for translucent geometry (see Section 19.8 for details). We retained support for a fully forward shaded pipeline within our renderer. Our forward renderer is used for translucent geometry as well as a fallback pipeline for all geometry on lower-end hardware.

This section describes methods we used to make simultaneous support for both forward and deferred shading pipelines more manageable.

19.3.1 A Limited Feature Set

We chose to limit the lighting features of our forward shading pipeline to a very small subset of the features supported by the deferred shading pipeline. Some features could not be supported for technical reasons, some were not supported due to time constraints, but many were not supported purely to make development easier.

Our forward renderer supports only hemispheric, directional, and point lights, with point lights being optional. No other type of light is supported (such as spotlights and box lights, both of which are supported by our deferred renderer). Shadows and other features found in the deferred pipeline were not supported in our forward pipeline.

Finally, shaders in the forward renderer could perform either per-vertex or per-pixel lighting, whereas in the deferred pipeline all lighting is per-pixel.

19.3.2 One Effect, Multiple Techniques

We have techniques in our effects for forward shading, deferred shading, shadow-map rendering, and more. We use an annotation on the technique to specify which type of rendering the technique was written for. This allows us to put all shader code in a single effect file that handles all variations of a shader used by the rendering engine. See Listing 19-1. This includes techniques for forward shading static and skinned geometry, techniques for "material shading" static and skinned geometry in our deferred pipeline, as well as techniques for shadow mapping.

Having all shader code for one effect in a single place allows us to share as much of that code as possible across all of the different techniques. Rather than using a single, monolithic effect file, we broke the code down into multiple shader libraries: source files, used by many effects, that contain shared vertex and pixel programs and generic functions. This approach minimized shader code duplication, making maintenance easier, decreasing the number of bugs, and improving consistency across shaders.
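The annotation scheme lets the engine pick a technique by matching the render mode and transform mode it needs. Below is a minimal C++ sketch of that lookup; `TechniqueDesc` and `FindTechnique` are hypothetical. In a real D3DX-based engine, the annotation values would be read through the effect interface (for example, `ID3DXEffect::GetAnnotationByName` followed by `GetInt`) rather than stored in a plain struct. The constants mirror the render-mode and transform-mode values in Listing 19-1.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Values mirror the #defines shared with the effect files.
enum RenderMode { RM_FORWARD = 1, RM_DEFERRED = 2 };
enum TransformMode { TM_STATIC = 1, TM_SKINNED = 2 };

// Hypothetical cached copy of one technique's annotations.
struct TechniqueDesc {
    std::string name;
    int renderMode;     // value of the "render_mode" annotation
    int transformMode;  // value of the "transform_mode" annotation
};

// Returns the first technique whose annotations match, or nullptr if
// the effect has no technique for this combination.
const TechniqueDesc* FindTechnique(const std::vector<TechniqueDesc>& techniques,
                                   RenderMode renderMode,
                                   TransformMode transformMode) {
    for (const TechniqueDesc& t : techniques) {
        if (t.renderMode == renderMode && t.transformMode == transformMode)
            return &t;
    }
    return nullptr;
}
```

Returning `nullptr` gives the renderer a natural place to fall back, for example to skip a body in a pipeline its effect does not support.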

19.3.3 Light Prioritization

Our forward renderer quickly generates additional render passes as more lights become active on a piece of geometry. This generates not only more draw calls, but also more state changes and more overdraw. We found that our forward renderer with just a fraction of the lights enabled could be slower than our deferred renderer with many lights enabled. So to maximize performance, we severely limited how many lights could be active on a single piece of geometry in the forward shading pipeline.

Listing 19-1. Sample Material Shader Source

```hlsl
// These are defined in a common header, or definitions
// can be passed in to the effect compiler.
#define RM_FORWARD  1
#define RM_DEFERRED 2
#define TM_STATIC   1
#define TM_SKINNED  2

// Various techniques are defined, each using annotations to describe
// the render mode and the transform mode supported by the technique.
technique ExampleForwardStatic
<
    int render_mode = RM_FORWARD;
    int transform_mode = TM_STATIC;
>
{ ... }

technique ExampleForwardSkinned
<
    int render_mode = RM_FORWARD;
    int transform_mode = TM_SKINNED;
>
{ ... }

technique ExampleDeferredStatic
<
    int render_mode = RM_DEFERRED;
    int transform_mode = TM_STATIC;
>
{ ... }

technique ExampleDeferredSkinned
<
    int render_mode = RM_DEFERRED;
    int transform_mode = TM_SKINNED;
>
{ ... }
```

Our deferred rendering pipeline can handle thirty, forty, fifty, or more active dynamic lights in a single frame, with the costs being independent of the geometry that is affected. However, our forward renderer quickly bogs down when just a couple of point lights start affecting a large amount of geometry. With such a performance discrepancy between the two pipelines, using the same lights in both pipelines was not possible.

We gave artists and designers the ability to assign priorities to lights and specify if a light was to be used by the forward shading pipeline, the deferred shading pipeline, or both. A light's priority is used in both the forward and the deferred shading pipelines whenever the engine needs to scale back lighting for performance. With the forward shading pipeline, scaling back a light simply means dropping it from the scene; however, in the deferred shading pipeline, a light could have shadows disabled or other expensive properties scaled back based on performance, quality settings, and the light's priority.
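Below is a minimal C++ sketch of priority-based scaling. It assumes a hypothetical `Light` record with a priority, per-pipeline flags, and a shadow flag. The forward path keeps only the highest-priority lights flagged for it and drops the rest. The deferred path keeps every deferred light but disables shadows on the lowest-priority casters once a shadow budget is exceeded. The budgets, and the choice of shadows as the property to scale back, are illustrative assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-light settings exposed to artists and designers.
struct Light {
    int priority;       // higher value = more important
    bool forward;       // used by the forward shading pipeline
    bool deferred;      // used by the deferred shading pipeline
    bool castsShadows;
};

// Sort highest priority first; stable so equal priorities keep their order.
void SortByPriority(std::vector<Light>& lights) {
    std::stable_sort(lights.begin(), lights.end(),
                     [](const Light& a, const Light& b) { return a.priority > b.priority; });
}

// Forward pipeline: scaling back means dropping all but the top lights.
std::vector<Light> SelectForwardLights(std::vector<Light> lights, std::size_t maxLights) {
    lights.erase(std::remove_if(lights.begin(), lights.end(),
                                [](const Light& l) { return !l.forward; }),
                 lights.end());
    SortByPriority(lights);
    if (lights.size() > maxLights) lights.resize(maxLights);
    return lights;
}

// Deferred pipeline: keep every light, but only the top `maxShadowed`
// shadow casters keep their (expensive) shadows.
std::vector<Light> ScaleDeferredLights(std::vector<Light> lights, std::size_t maxShadowed) {
    assert(maxShadowed <= lights.size() || !lights.empty() || maxShadowed == 0 || true);
    lights.erase(std::remove_if(lights.begin(), lights.end(),
                                [](const Light& l) { return !l.deferred; }),
                 lights.end());
    SortByPriority(lights);
    std::size_t shadowed = 0;
    for (Light& l : lights) {
        if (!l.castsShadows) continue;
        if (shadowed < maxShadowed) ++shadowed;
        else l.castsShadows = false;  // scaled back, not removed
    }
    return lights;
}
```

The asymmetry is deliberate. The forward renderer's cost grows with every light it keeps, while the deferred renderer can afford many lights and only needs to trim the expensive extras.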

In general, maps were lit targeting the deferred shading pipeline. A quick second pass was made to ensure that the lighting in the forward shading pipeline was acceptable. Generally, the only additional work was to increase ambient lighting in the forward pipeline to make up for having fewer lights than in the deferred pipeline.

19.4 Advanced Lighting Features

All of the following techniques are possible in a forward or deferred shading engine. We use all of these in our deferred shading pipeline. Even though deferred shading is not required, it made implementation much cleaner. With deferred shading, we kept the implementation of such features separate from the material shaders. This meant we could add new lighting models and light types without having to modify material shaders. Likewise, we could add material shaders without any dependency on lighting models or light types.

19.4.1 Bidirectional Lighting

Traditional hemispheric lighting as described in the DirectX documentation is fairly common. This lighting model uses two colors, traditionally labeled as top and bottom, and then linearly interpolates between these two colors based on the surface normal. Typically hemispheric lighting interpolates the colors as the surface normal moves from pointing directly up to directly down (hence the terms top and bottom). In Tabula Rasa, we support this traditional hemispheric model, but we also added a back color to directional lights.

With deferred shading, artists are able to easily add multiple directional lights. We found them adding a second directional light, aimed in nearly the opposite direction of the first, to simulate bounce or radiant light from the environment. They really liked the look and the control this gave them, so the natural optimization was to combine the two opposing directional lights into a single, new type of directional light: one with a forward color and a back color. This gave them the same control at half the cost.

As a further optimization, the back color is just a simple N · L, or a simple Lambertian light model. We do not perform any specular, shadowing, occlusion, or other advanced lighting calculation on it. This back color is essentially a cheap approximation of radiant or ambient light in the scene. We save N · L computed from the front light and just negate it for the back color.
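As a sketch of the bidirectional model, the following C++ function evaluates the diffuse term of a directional light that has both a forward and a back color. It computes N · L once and negates it for the back color, as described above. The `Vec3` type is hypothetical, and `toLight` is assumed to be the normalized direction toward the light. Specular, shadowing, and globe maps are omitted.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float Dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Diffuse contribution of a bidirectional directional light.
// `normal` and `toLight` must be unit length.
Vec3 BidirectionalDiffuse(const Vec3& normal, const Vec3& toLight,
                          const Vec3& frontColor, const Vec3& backColor) {
    assert(std::fabs(Dot(normal, normal) - 1.0f) < 1e-3f);
    const float nDotL = Dot(normal, toLight);   // computed once
    const float front = std::max(nDotL, 0.0f);  // regular Lambert term
    const float back = std::max(-nDotL, 0.0f);  // same N.L, negated
    return {frontColor.x * front + backColor.x * back,
            frontColor.y * front + backColor.y * back,
            frontColor.z * front + backColor.z * back};
}
```

Because the back term reuses the front N · L, it adds only a negation, a clamp, and a multiply-add per channel.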

19.4.2 Globe Mapping

A globe map is a texture used to color light, like a glass globe placed around a light source in real life. As a ray of light is emitted from the light source, it must pass through this globe, where it becomes colored or blocked. For point lights, we use a cube map for this effect. For spotlights, we use a 2D texture. Globe maps can be applied to cheaply mimic stained-glass effects or to block light in specific patterns. We also give artists the ability to rotate or animate these globe maps.

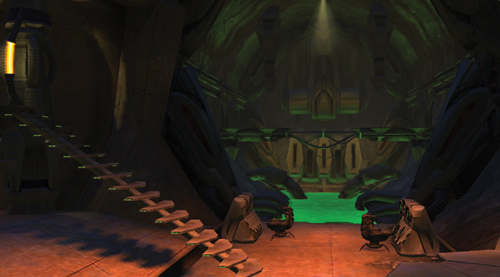

Artists use globe maps to cheaply imitate shadow maps when possible, and to create stained-glass effects, disco-ball reflection effects, and more. All lights in our engine support them. See Figures 19-1 through 19-3 for an example of a globe map applied to a light.

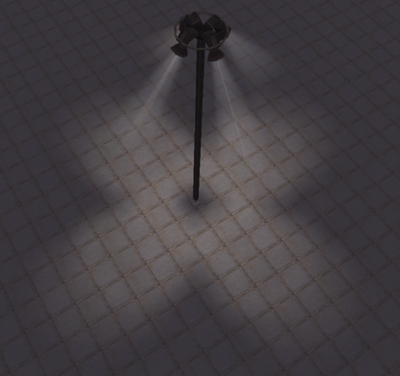

Figure 19-1 A Basic Spotlight

Figure 19-2 A Simple Globe Map

Figure 19-3 A Spotlight with Globe Map
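The globe-map lookup itself is cheap. This minimal C++ sketch works under stated assumptions. For a point light, the cube-map lookup vector is taken to be the light-to-pixel direction expressed in the light's local frame, so rotating that frame (the hypothetical `lightRotation` matrix) rotates or animates the globe. For a spotlight, the pixel is projected through a symmetric perspective frustum with half-angle `halfAngle` to get 2D texture coordinates. The actual texture fetch is left to the shader.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Vec2 { float u, v; };

// Rows are the light's local X, Y, and Z axes in world space
// (orthonormal), so multiplying by it converts world to light space.
struct Mat3 { Vec3 row[3]; };

float Dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 WorldToLight(const Mat3& lightRotation, const Vec3& lightPos, const Vec3& p) {
    const Vec3 d{p.x - lightPos.x, p.y - lightPos.y, p.z - lightPos.z};
    return {Dot(lightRotation.row[0], d), Dot(lightRotation.row[1], d),
            Dot(lightRotation.row[2], d)};
}

// Point light: vector used to sample the globe cube map
// (cube-map lookups do not require a normalized vector).
Vec3 PointGlobeLookup(const Mat3& lightRotation, const Vec3& lightPos, const Vec3& p) {
    return WorldToLight(lightRotation, lightPos, p);
}

// Spotlight: project into the light's frustum (local +Z is the spot
// direction) and map [-1, 1] to [0, 1]. V is flipped because Direct3D
// texture coordinates start at the top-left corner.
Vec2 SpotGlobeUV(const Mat3& lightRotation, const Vec3& lightPos, const Vec3& p,
                 float halfAngle) {
    const Vec3 l = WorldToLight(lightRotation, lightPos, p);
    assert(l.z > 0.0f);  // the pixel must be in front of the spotlight
    const float scale = 1.0f / (l.z * std::tan(halfAngle));
    return {0.5f + 0.5f * l.x * scale, 0.5f - 0.5f * l.y * scale};
}
```

Animating a globe map then amounts to updating `lightRotation` each frame. No texture data changes.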

19.4.3 Box Lights

In Tabula Rasa, directional lights are global lights that affect the entire scene and are used to simulate sunlight or moonlight. We found artists wanting to light a small area with a directional light, but they did not want the light to affect the entire scene. What they needed were localized directional lights.

Our solution for a localized directional light was a box light. These lights use our basic directional lighting model, but they are confined within a rectangular volume. They support falloff like spotlights, so their intensity can fade out as they near the edge of their light volume. Box lights also support shadow maps, globe maps, back color, and all of the other features of our lighting engine.
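Below is a C++ sketch of one plausible box-light attenuation. For brevity it assumes an axis-aligned box with a center and half-extents, plus a hypothetical `falloff` fraction. Intensity is 1 in the interior and fades linearly to 0 over the outer `falloff` fraction of each axis. The chapter does not specify the exact falloff curve, so treat the linear ramp as an assumption. A rotated box would first transform the point into the box's local frame.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Axis-aligned for brevity; a rotated box would first transform the
// point into the box's local frame.
struct BoxLight {
    Vec3 center;
    Vec3 halfExtents;  // all components > 0
    float falloff;     // fraction of each half-extent used for the fade, (0, 1]
};

// Returns the intensity scale in [0, 1] for a world-space point.
float BoxAttenuation(const BoxLight& box, const Vec3& p) {
    assert(box.falloff > 0.0f && box.falloff <= 1.0f);
    // Normalized distance from the center along each axis:
    // 0 at the center, 1 on the faces of the box.
    const float nx = std::fabs(p.x - box.center.x) / box.halfExtents.x;
    const float ny = std::fabs(p.y - box.center.y) / box.halfExtents.y;
    const float nz = std::fabs(p.z - box.center.z) / box.halfExtents.z;
    const float n = std::max(nx, std::max(ny, nz));
    // 1 in the inner region, linear fade to 0 at the faces, 0 outside.
    return std::min(std::max((1.0f - n) / box.falloff, 0.0f), 1.0f);
}
```

The rest of the box light can reuse the directional lighting model unchanged, scaled by this factor.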

19.4.4 Shadow Maps

There is no precomputed lighting in Tabula Rasa. We exclusively use shadow maps, not stencil shadows or light maps. Artists can enable shadow casting on any light (except hemispheric). We use cube maps for point light shadow maps and 2D textures for everything else.

All shadow maps currently in Tabula Rasa are floating-point textures and utilize jitter sampling to smooth out the shadows. Artists can control the spread of the jitter sampling, giving control over how soft the shadow appears. This approach allowed us to write a single solution that worked and looked the same on all hardware; however, hardware-specific texture formats can be used as well for shadow maps. Hardware-specific formats can provide benefits such as better precision and hardware filtering.
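Below is a CPU-side C++ sketch of jittered shadow-map sampling. It assumes a floating-point depth map stored row-major, a fixed set of hypothetical jitter offsets in texels, and a `spread` parameter standing in for the artist-controlled spread. The result is the fraction of samples in which the receiver is lit. A shader version would do the same depth comparisons with texture fetches.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct ShadowMap {
    int width, height;
    std::vector<float> depth;  // row-major, light-space depth per texel

    float Fetch(int x, int y) const {  // clamp-to-edge addressing
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return depth[static_cast<std::size_t>(y) * width + x];
    }
};

// Fraction of jittered samples that see the light (0 = fully shadowed,
// 1 = fully lit). (u, v) are texel coordinates, receiverDepth is the
// pixel's depth as seen from the light, and spread scales the pattern.
float JitteredShadow(const ShadowMap& map, float u, float v,
                     float receiverDepth, float spread, float bias) {
    assert(spread >= 0.0f);
    // Hypothetical 8-tap pattern, in texels, inside the unit disk.
    static const float kOffsets[8][2] = {
        {-0.9f, 0.1f}, {0.8f, -0.3f}, {-0.2f, -0.85f}, {0.3f, 0.9f},
        {-0.5f, 0.5f}, {0.5f, 0.45f}, {-0.45f, -0.4f}, {0.15f, -0.2f}};
    int lit = 0;
    for (const auto& o : kOffsets) {
        const int x = static_cast<int>(std::floor(u + o[0] * spread));
        const int y = static_cast<int>(std::floor(v + o[1] * spread));
        if (receiverDepth - bias <= map.Fetch(x, y)) ++lit;
    }
    return lit / 8.0f;
}
```

A larger `spread` widens the penumbra. Because the same pattern and comparisons run everywhere, the result looks the same on all hardware, which matches the portability goal described above.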

Global Shadow Maps

Many papers exist on global shadow mapping, or shadow mapping the entire scene from the perspective of a single directional light. We spent a couple of weeks researching and experimenting with perspective shadow maps (Stamminger and Drettakis 2002) and trapezoidal shadow maps (Martin and Tan 2004). The drawback of these techniques is that the final result depends on the angle between the light direction and the eye direction. With both methods, shadow quality varies as the camera moves, and the worst case reduces to a standard orthographic projection.

In Tabula Rasa, there is a day and night cycle, with the sun and moon constantly moving across the sky. Dusk and dawn are tricky because the light direction comes close to being parallel to the ground, which greatly increases the chance of the eye direction becoming parallel to the light direction. This is the worst-case scenario for perspective and trapezoidal shadow maps.

Due to the inconsistent shadow quality as the camera or light source moved, we ended up using a single large 2048×2048 shadow map with a standard orthographic projection. This gave us consistent results that were independent of the camera or light direction. However, techniques we have not tried, such as cascaded shadow maps, may work better.

We used multisample jitter sampling to soften shadow edges, we quantized the position of the light transform so it always pointed to a consistent location within a subpixel of the shadow map, and we quantized the direction of the light so the values going into the shadow map were not minutely changing every frame. This gave us a stable shadow with a free-moving camera. See Listing 19-2.

Listing 19-2. C++ Code That Quantizes Light Position for Building the Shadow Map Projection Matrix

```cpp
// Assumes a square shadow map and square shadow view volume.
// vector3, dot(), and the light basis vectors lightRight, lightUp, and
// lightDir are engine-side types and values.

// Compute how "wide" a pixel in the shadow map is in world space.
const float pixelSize = viewSize / shadowMapWidth;

// How much has our light position changed since last frame?
vector3 delta(lightPos - lastLightPos);

// Project the delta onto the basis vectors of the light matrix.
float xProj = dot(delta, lightRight);
float yProj = dot(delta, lightUp);
float zProj = dot(delta, lightDir);

// Quantize the projection to a whole number of steps
// (static_cast truncates toward zero).
// How many "pixels" across and up has the light moved?
const int numStepsX = static_cast<int>(xProj / pixelSize);
const int numStepsY = static_cast<int>(yProj / pixelSize);

// Go ahead and quantize "z", the component along the light direction.
// This value affects the depth components going into the shadow map.
// This will stabilize the shadow depth values and minimize
// shadow bias aliasing.
const float zQuantization = 0.5f;
const int numStepsZ = static_cast<int>(zProj / zQuantization);

// Compute the new light position that retains the same subpixel
// location as the last light position.
lightPos = lastLightPos + (pixelSize * numStepsX) * lightRight
                        + (pixelSize * numStepsY) * lightUp
                        + (zQuantization * numStepsZ) * lightDir;
```

Local Shadow Maps

Because any light can cast shadows in our engine, with maps having hundreds of lights, the engine must manage the creation and use of many shadow maps. All shadow maps are generated on the fly as they are needed. However, most shadow maps are static and do not need to be regenerated each frame. We gave artists control over this by letting them set a flag on each shadow-casting light if they wanted to use a static shadow map or a dynamic shadow map. Static shadow maps are built only once and reused each frame; dynamic shadow maps are rebuilt each frame.

We flag geometry as static or dynamic as well, indicating if the geometry moves or not at runtime. This allows us to cull geometry based on this flag. When building static shadow maps, we cull out dynamic geometry. This prevents a shadow from a dynamic object, such as an avatar, from getting "baked" into a static shadow map. However, dynamic geometry is shadowed just like static geometry by these static shadow maps. For example, an avatar walking under a staircase will have the shadows from the staircase fall across it.

A lot of this work can be automated and optimized. We chose not to prebuild static shadow maps; instead, we generate them on the fly as we determine they are needed. This means we do not have to ship and patch shadow map files, and it reduces the amount of data we have to load from disk when loading or running around a map. To combat video memory usage and texture creation overhead, we use a shadow map pool. We give more details on this later in the chapter.
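As a placeholder illustration only, here is a minimal C++ sketch of a shadow-map pool that recycles textures by size and format instead of creating and destroying them. `ShadowTexture` and the `create` callback are hypothetical stand-ins for real texture objects and a device call. The engine's actual pool policy is not described in this section.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical handle standing in for a GPU render-target texture.
struct ShadowTexture {
    int size;    // square width/height in texels
    int format;  // e.g., an enum value for a 32-bit float format
};

class ShadowMapPool {
public:
    using Factory = std::function<std::unique_ptr<ShadowTexture>(int, int)>;

    explicit ShadowMapPool(Factory create) : create_(std::move(create)) {}

    // Reuse a free texture with matching size/format, or create a new one.
    std::unique_ptr<ShadowTexture> Acquire(int size, int format) {
        auto& freeList = free_[{size, format}];
        if (!freeList.empty()) {
            std::unique_ptr<ShadowTexture> tex = std::move(freeList.back());
            freeList.pop_back();
            return tex;
        }
        return create_(size, format);
    }

    // Return a texture to the pool instead of destroying it.
    void Release(std::unique_ptr<ShadowTexture> tex) {
        assert(tex != nullptr);
        const std::pair<int, int> key{tex->size, tex->format};
        free_[key].push_back(std::move(tex));
    }

private:
    Factory create_;
    std::map<std::pair<int, int>, std::vector<std::unique_ptr<ShadowTexture>>> free_;
};
```

Keying free lists by size and format means a released map can only be reused where it fits exactly. That keeps creation calls rare once the working set of shadow-casting lights stabilizes.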

Dynamic shadow-casting lights are the most expensive, because they have to constantly regenerate their shadow maps. If a dynamic shadow-casting light doesn't move, or doesn't move often, several techniques can be used to help improve its performance. The easiest is to not regenerate one of these dynamic shadow maps unless a dynamic piece of geometry is within its volume. The other option is to render the static geometry into a separate static shadow map that is generated only once. Each frame it is required, render just the dynamic geometry to a separate dynamic shadow map. Composite these two shadow maps together by comparing values from each and taking the lowest, or nearest, value. The final result will be a shadow map equivalent to rendering all geometry into it, but only dynamic geometry is actually rendered.
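The two optimizations in the previous paragraph can be sketched in C++ as follows. The sketch assumes equal-size depth maps stored as float arrays, where smaller values are nearer the light and the dynamic map is cleared to the far value before dynamic geometry is drawn. It also assumes a hypothetical bounding-sphere test for whether any dynamic body lies in the light's volume. `Composite` takes the per-texel minimum, which is what rendering all geometry into one map would have produced.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Sphere { float x, y, z, radius; };

// Only regenerate a dynamic light's shadow map when some dynamic body
// overlaps the light's volume (approximated here by a bounding sphere).
bool NeedsDynamicUpdate(const Sphere& lightVolume,
                        const std::vector<Sphere>& dynamicBodies) {
    for (const Sphere& b : dynamicBodies) {
        const float dx = b.x - lightVolume.x, dy = b.y - lightVolume.y,
                    dz = b.z - lightVolume.z, r = b.radius + lightVolume.radius;
        if (dx * dx + dy * dy + dz * dz <= r * r) return true;
    }
    return false;
}

// Merge the once-built static map with the per-frame dynamic map by
// keeping the nearest occluder in each texel.
std::vector<float> Composite(const std::vector<float>& staticMap,
                             const std::vector<float>& dynamicMap) {
    assert(staticMap.size() == dynamicMap.size());
    std::vector<float> result(staticMap.size());
    for (std::size_t i = 0; i < result.size(); ++i)
        result[i] = std::min(staticMap[i], dynamicMap[i]);
    return result;
}
```

On the GPU, the same minimum could be taken when sampling both maps during lighting rather than written to a third map.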

19.4.5 Future Expansion

With all lighting functionality cleanly separated from geometry rendering, modifying or adding lighting features is extremely easy with a deferred shading-based engine. In fact, box lights went from a proposal on a whiteboard to fully functional with complete integration into our map editor in just three days.

High dynamic range, bloom, and other effects are just as easy to add to a deferred shading-based engine as to a forward-based one. The architecture of a deferred shading pipeline lends itself well to expansion in most ways: adding features to a deferred engine is typically easier than, or at least no harder than, adding them to a forward shading-based engine. The factors most likely to constrain the feature set of a deferred shading engine are the limited number of material properties that can be stored per pixel, available video memory, and video memory bandwidth.

19.5 Benefits of a Readable Depth and Normal Buffer

A requirement of deferred shading is building textures that hold depth and normal information. These are used for lighting the scene; however, they can be used outside of the scope of lighting for various visual effects such as fog, depth blur, volumetric particles, and removing hard edges where alpha-blended geometry intersects opaque geometry.

19.5.1 Advanced Water and Refraction

In Tabula Rasa, if using the deferred shading pipeline, our water shader takes into account water depth (in eye space). As each pixel of the water is rendered, the shader samples the depth saved from the deferred shading pipeline and compares it to the depth of the water pixel. This means our water can auto-shoreline, the water can change color and transparency with eye-space depth, and pixels beneath the water refract whereas pixels above the water do not. It also means that we can do all of these things in a single pass, unlike traditional forward renderers.

Our forward renderer supports only our basic refraction feature, and it requires an additional render pass to initialize the alpha channel of the refraction texture in order to not refract pixels that are not underneath the water. This basic procedure is outlined in Sousa 2005.

In our deferred renderer, we can sample the eye depth of the current pixel and the eye depth of the neighboring refracted pixel. By comparing these depths, we can determine if the refracted pixel is indeed behind the target pixel. If it is, we proceed with the refraction effect; otherwise we do not. See Figures 19-4 and 19-5.

Figure 19-4 Water Using Forward Shading Only

Figure 19-5 Water Using Forward Shading, but with Access to the Depth Buffer from Deferred Shading Opaque Geometry
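The refraction test reduces to one depth comparison per pixel. The following C++ sketch assumes eye-space depths that increase away from the camera. A hypothetical `DepthBuffer` stands in for the depth target written by the deferred pass. The distorted (refracted) texel is used only if the scene at that texel lies behind the water surface; otherwise the undistorted texel is kept.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Texel { int x, y; };

// Eye-space depth from the deferred (opaque) pass, row-major.
struct DepthBuffer {
    int width, height;
    std::vector<float> eyeDepth;
    float At(Texel t) const {
        assert(t.x >= 0 && t.x < width && t.y >= 0 && t.y < height);
        return eyeDepth[static_cast<std::size_t>(t.y) * width + t.x];
    }
};

// Choose which scene texel to show through the water surface.
// `waterDepth` is the eye-space depth of the water pixel being shaded.
Texel ChooseRefractionTexel(const DepthBuffer& scene, Texel pixel,
                            Texel refracted, float waterDepth) {
    // Refract only if the geometry at the offset position is actually
    // behind the water; otherwise objects in front of the water would
    // bleed into the refraction.
    return scene.At(refracted) > waterDepth ? refracted : pixel;
}
```

This replaces the forward renderer's extra pass for masking the refraction texture's alpha channel.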

To give the artist control over color and transparency with depth, we actually use a volume texture as well as a 1D texture. The 1D texture is just a lookup table for transparency with the normalized water depth being used for the texture coordinate. This technique allowed artists to easily simulate a nonlinear relationship between water depth and its transparency. The volume texture was actually used for the water surface color. This could be a flat volume texture (becoming a regular 2D texture) or it could have two or four W layers. Again, the normalized depth was used for the W texture coordinate with the UV coordinates being specified by the artists. The surface normal of the water was driven by two independently UV-animated normal maps.
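The depth-driven lookups can be sketched in C++ as follows. The sketch assumes a hypothetical `maxDepth` that normalizes water depth into [0, 1], and a 1D table sampled with linear filtering and clamp addressing, as a 1D texture would be. Water depth at a pixel is taken as the eye-space depth of the opaque scene behind it minus the eye-space depth of the water surface. The same normalized value would serve as the W coordinate for the volume texture.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Normalized water thickness along the view ray, clamped to [0, 1].
float NormalizedWaterDepth(float sceneEyeDepth, float waterEyeDepth, float maxDepth) {
    assert(maxDepth > 0.0f);
    const float d = (sceneEyeDepth - waterEyeDepth) / maxDepth;
    return std::min(std::max(d, 0.0f), 1.0f);
}

// Linearly filtered 1D lookup, like sampling a 1D texture with clamp
// addressing; texel centers sit at (i + 0.5) / size.
float SampleLut1D(const std::vector<float>& lut, float u) {
    assert(!lut.empty());
    const float x = u * static_cast<float>(lut.size()) - 0.5f;
    const float clamped = std::min(std::max(x, 0.0f), static_cast<float>(lut.size() - 1));
    const std::size_t i0 = static_cast<std::size_t>(clamped);
    const std::size_t i1 = std::min(i0 + 1, lut.size() - 1);
    const float t = clamped - static_cast<float>(i0);
    return lut[i0] + (lut[i1] - lut[i0]) * t;
}
```

A table lets artists author any monotonic or nonmonotonic transparency curve without changing shader code.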

19.5.2 Resolution-Independent Edge Detection

Shishkovtsov 2005 presented a method for edge detection that was used for faking an antialiasing pass on the frame. The implementation relied on some magic numbers that varied based on resolution. We needed edge detection for antialiasing as well; however, we modified the algorithm to make the implementation resolution independent.

We looked at changes in depth gradients and changes in normal angles by sampling all eight neighbors surrounding a pixel. This is basically the same as Shishkovtsov's method. We diverge at this point and compare the maximum change in depth to the minimum change in depth to determine how much of an edge is present. This depth gradient between pixels is resolution dependent. By comparing relative changes in this gradient instead of comparing the gradient to fixed values, we are able to make the logic resolution independent.

Our normal processing is very similar to Shishkovtsov's method. We compare the changes in the cosine of the angle between the center pixel and its neighboring pixels along the same edges on which we test depth gradients. We use our own constant threshold here; because the change in normals across pixels does not depend on resolution, the logic remains resolution independent.

We do not put any logic in the algorithm to limit the selection to "top right" or "front" edges; consequently, many edges become a couple of pixels wide. However, this works out well with our filtering method to help smooth those edges.

The output of the edge detection is a per-pixel weight between zero and one. The weight reflects how much of an edge the pixel is on. We use this weight to do four bilinear samples when computing the final pixel color. The four samples we take are at the pixel center for a weight of zero and at the four corners of the pixel for a weight of one. This results in a weighted average of the target pixel with all eight of its neighbors. The more of an edge a pixel is, the more it is blended with its neighbors. See Listing 19-3.
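Below is a CPU-side C++ sketch of this filtering step. It assumes a single-channel image with clamp-to-edge addressing and a hand-written bilinear sample. The four taps move from the pixel center (weight 0) toward its four corners (weight 1, half a pixel away on each axis). At weight 1, each corner tap averages a 2×2 block. The result is then the 3×3 neighborhood weighted 4/16 at the center, 2/16 at the edge neighbors, and 1/16 at the diagonal neighbors.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Image {
    int width, height;
    std::vector<float> texels;  // row-major
    float At(int x, int y) const {  // clamp-to-edge addressing
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return texels[y * width + x];
    }
    // Bilinear sample at continuous coordinates where texel (x, y) has
    // its center at (x + 0.5, y + 0.5).
    float Bilinear(float u, float v) const {
        const float fx = u - 0.5f, fy = v - 0.5f;
        const int x0 = static_cast<int>(std::floor(fx));
        const int y0 = static_cast<int>(std::floor(fy));
        const float tx = fx - x0, ty = fy - y0;
        const float top = At(x0, y0) * (1 - tx) + At(x0 + 1, y0) * tx;
        const float bottom = At(x0, y0 + 1) * (1 - tx) + At(x0 + 1, y0 + 1) * tx;
        return top * (1 - ty) + bottom * ty;
    }
};

// Blend pixel (x, y) with its neighbors according to its edge weight.
float EdgeFilter(const Image& img, int x, int y, float edgeWeight) {
    assert(edgeWeight >= 0.0f && edgeWeight <= 1.0f);
    const float cx = x + 0.5f, cy = y + 0.5f;  // pixel center
    const float o = 0.5f * edgeWeight;          // 0 = center, 0.5 = corner
    return 0.25f * (img.Bilinear(cx - o, cy - o) + img.Bilinear(cx + o, cy - o) +
                    img.Bilinear(cx - o, cy + o) + img.Bilinear(cx + o, cy + o));
}
```

Because the blend is driven by bilinear filtering, it costs four texture fetches per pixel regardless of weight. In a shader, the filtering hardware does the 2×2 averaging for free.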

Listing 19-3. Shader Source for Edge Detection

```hlsl
////////////////////////////
// Neighbor offset table
////////////////////////////
const static float2 offsets[9] = {
    float2( 0.0,  0.0), // Center       0
    float2(-1.0, -1.0), // Top Left     1
    float2( 0.0, -1.0), // Top          2
    float2( 1.0, -1.0), // Top Right    3
    float2( 1.0,  0.0), // Right        4
    float2( 1.0,  1.0), // Bottom Right 5
    float2( 0.0,  1.0), // Bottom       6
    float2(-1.0,  1.0), // Bottom Left  7
    float2(-1.0,  0.0)  // Left         8
};

// PixelSize: the size of one pixel in texture coordinates.
float DL_GetEdgeWeight(in float2 screenPos)
{
    float Depth[9];
    float3 Normal[9];

    // Retrieve normal and depth data for all neighbors.
    for (int i = 0; i < 9; ++i)
    {
        float2 uv = screenPos + offsets[i] * PixelSize;
        Depth[i]  = DL_GetDepth(uv);   // Retrieves depth from MRTs
        Normal[i] = DL_GetNormal(uv);  // Retrieves normal from MRTs
    }

    // Compute deltas in depth. Deltas1 and Deltas2 hold opposite
    // neighbors (1/5, 2/6, 3/7, 4/8).
    float4 Deltas1;
    float4 Deltas2;
    Deltas1.x = Depth[1];
    Deltas1.y = Depth[2];
    Deltas1.z = Depth[3];
    Deltas1.w = Depth[4];
    Deltas2.x = Depth[5];
    Deltas2.y = Depth[6];
    Deltas2.z = Depth[7];
    Deltas2.w = Depth[8];

    // Compute absolute gradients from center.
    Deltas1 = abs(Deltas1 - Depth[0]);
    Deltas2 = abs(Depth[0] - Deltas2);

    // Find min and max gradient, ensuring min != 0.
    float4 maxDeltas = max(Deltas1, Deltas2);
    float4 minDeltas = max(min(Deltas1, Deltas2), 0.00001);

    // Compare change in gradients, flagging ones that change
    // significantly.
    // How severe the change must be to get flagged is a function of the
    // minimum gradient. It is not resolution dependent. The constant
    // number here would change based on how the depth values are stored
    // and how sensitive the edge detection should be.
    float4 depthResults = step(minDeltas * 25.0, maxDeltas);

    // Compute change in the cosine of the angle between normals.
    Deltas1.x = dot(Normal[1], Normal[0]);
    Deltas1.y = dot(Normal[2], Normal[0]);
    Deltas1.z = dot(Normal[3], Normal[0]);
    Deltas1.w = dot(Normal[4], Normal[0]);
    Deltas2.x = dot(Normal[5], Normal[0]);
    Deltas2.y = dot(Normal[6], Normal[0]);
    Deltas2.z = dot(Normal[7], Normal[0]);
    Deltas2.w = dot(Normal[8], Normal[0]);
    Deltas1 = abs(Deltas1 - Deltas2);

    // Compare change in the cosine of the angles, flagging changes
    // above some constant threshold. The cosine of the angle is not a
    // linear function of the angle, so to have the flagging be
    // independent of the angles involved, an arccos function would be
    // required.
    float4 normalResults = step(0.4, Deltas1);

    normalResults = max(normalResults, depthResults);

    return (normalResults.x + normalResults.y +
            normalResults.z + normalResults.w) * 0.25;
}
```

19.6 Caveats

19.6.1 Material Properties

Choose Properties Wisely

In Tabula Rasa we target DirectX 9, Shader Model 3.0-class hardware for our deferred shading pipeline. This gives us a large potential user base, but it also imposes constraints that DirectX 10, Shader Model 4.0-class hardware can alleviate. The most significant is that most Shader Model 3.0-class hardware is limited to four simultaneous render targets and does not support independent bit depths per render target. This leaves us very few data channels for storing material attributes.

Assuming a typical DirectX 9 32-bit multiple render target setup with four render targets, one exclusively for depth, there are 13 channels available to store pixel properties: 3 four-channel RGBA textures, and one 32-bit high-precision channel for depth. Going with 64-bit over 32-bit render targets adds precision, but not necessarily any additional data channels.

Even though most channels are stored as integer (fixed-point) values in a texture, in Shader Model 3.0 all access to that data goes through floating-point registers. As a result, bit masking or similar tricks for packing more data into a single channel are not really feasible under Shader Model 3.0. Shader Model 4.0, however, does support true integer operations.

It is important that these channels hold generic material data that maximizes how well the engine can light each pixel from any type of light. Try to avoid data that is specific to a particular type of light. With such a limited number of channels, each should be considered a very valuable resource and utilized accordingly.

There are some common techniques to help compress or reduce the number of data channels required for material attributes. Storing pixel normals in view space allows the normal to be stored in two channels instead of three. In view space, the z components of all the normals have the same sign (all visible pixels face the camera). Using this information, along with the knowledge that every normal is a unit vector, we can reconstruct the z component from the x and y components of the normal. Another technique is to store material attributes in a texture lookup table, and then store the appropriate texture coordinate(s) in the MRT data channels.
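The two-channel normal trick can be sketched on the CPU in C++, mirroring what shader-side encode and decode helpers would do. This is a minimal sketch, not the Tabula Rasa code: `EncodeNormal`, `DecodeNormal`, and the small vector structs are hypothetical, and the sketch assumes a Direct3D-style left-handed view space in which the camera looks down +z, so camera-facing normals have z <= 0. Note that with a perspective projection, surfaces seen at grazing angles near the screen edges can have a view-space z of the opposite sign, so the reconstruction is an approximation there.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper types for this sketch.
struct Float2 { float x, y; };
struct Float3 { float x, y, z; };

// Store only x and y, remapped from [-1, 1] to [0, 1] so they fit an
// unsigned render target channel. (No quantization is simulated here.)
Float2 EncodeNormal(Float3 n)
{
    return { n.x * 0.5f + 0.5f, n.y * 0.5f + 0.5f };
}

// Recover x and y, then rebuild z from the unit-length constraint.
// Assumption: left-handed view space looking down +z, so visible
// surfaces face the camera and z takes the negative root.
Float3 DecodeNormal(Float2 e)
{
    const float x = e.x * 2.0f - 1.0f;
    const float y = e.y * 2.0f - 1.0f;
    // Clamp guards against small negative values caused by storage error.
    const float zSq = std::max(0.0f, 1.0f - x * x - y * y);
    return { x, y, -std::sqrt(zSq) };
}
```

Decoding costs a square root per pixel in every light shader, which is the price paid for freeing a channel.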

These material attributes are the "glue" that connects material shaders to light shaders. They are the output of material shaders and are part of the input into the light shaders.

They are therefore the only dependency shared by material and light shaders, so changing the material attribute data can require changing all shaders, material and light alike.

Encapsulate and Hide MRT Data

We do not expose the data channel or the data format of a material attribute to the material or light shaders. Functions are used for setting and retrieving all material attribute data. This allows any data location or format to change, and the material and light shaders only need to be rebuilt, not modified.

We also use a function to initialize all MRT data in every material shader. This may add unnecessary instructions, but it allows us to add new data channels in the future without modifying existing material shaders. A material shader needs to be modified only if it must change the default value of a newly added material attribute. See Listing 19-4.

Example 19-4. Encapsulate and Hide MRT Layout from Material Shaders

```hlsl
// Put all of the material attribute layout information in its own
// header file and include this header from material and light
// shaders. Provide accessor and mutator functions for each
// material attribute and use those functions exclusively for
// accessing the material attribute data in the MRTs.

// Deferred lighting material shader output
struct DL_PixelOutput
{
    float4 mrt_0 : COLOR0;
    float4 mrt_1 : COLOR1;
    float4 mrt_2 : COLOR2;
    float4 mrt_3 : COLOR3;
};

// Function to initialize material output to default values
void DL_Reset(out DL_PixelOutput frag)
{
    // Set all material attributes to suitable default values
    frag.mrt_0 = 0;
    frag.mrt_1 = 0;
    frag.mrt_2 = 0;
    frag.mrt_3 = 0;
}

// Mutator/Accessor - Any data conversion/compression should be done
// here to keep it and the exact storage specifics abstracted and
// hidden from shaders
void DL_SetDiffuse(inout DL_PixelOutput frag, in float3 diffuse)
{
    frag.mrt_0.rgb = diffuse;
}

float3 DL_GetDiffuse(in float2 coord)
{
    return tex2D(MRT_Sampler_0, coord).rgb;
}

//...

// Example material shader
DL_PixelOutput psProgram(INPUT input)
{
    DL_PixelOutput output;

    // Initialize output with default values
    DL_Reset(output);

    // Override default values with properties
    // specific to this shader.
    DL_SetDiffuse(output, input.diffuse);
    DL_SetDepth(output, input.pos);
    DL_SetNormal(output, input.normal);

    return output;
}
```

19.6.2 Precision

With deferred shading, it is easy to run into issues that result from a loss of data precision. The most obvious source is storing material attributes in the MRT data channels. In Tabula Rasa, most data channels are 8-bit or 16-bit, depending on whether 32-bit or 64-bit render targets are in use, respectively (four channels per render target). The hardware's internal registers use different precisions and formats than the render target channels, so data is converted every time it is written to or read from a channel. For example, our normals are computed at the hardware's full per-component precision, but they are saved with only 8 or 16 bits per component. With 8-bit precision, our normals do not yield smooth specular highlights, and aliasing is clearly visible in the specular lighting.
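The cost of 8-bit storage can be estimated by simulating the round trip through a render target channel. The C++ sketch below (function names are hypothetical) rounds each component of a unit normal to the nearest value an unsigned normalized channel of a given bit depth can hold, maps it back to [-1, 1], and reports the angle between the original normal and the renormalized result.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Round a component in [-1, 1] to the nearest value representable in an
// unsigned normalized (UNORM) channel with the given bit depth.
double Quantize(double v, int bits)
{
    const double levels = std::pow(2.0, bits) - 1.0;            // 255 or 65535
    const double unorm = std::round((v * 0.5 + 0.5) * levels) / levels;
    return unorm * 2.0 - 1.0;                                    // back to [-1, 1]
}

// Angle, in degrees, between a unit normal and its quantized and
// renormalized copy.
double QuantizationErrorDegrees(Vec3 n, int bits)
{
    const Vec3 q{Quantize(n.x, bits), Quantize(n.y, bits), Quantize(n.z, bits)};
    const double len = std::sqrt(q.x * q.x + q.y * q.y + q.z * q.z);
    double c = (n.x * q.x + n.y * q.y + n.z * q.z) / len;
    c = std::fmin(1.0, std::fmax(-1.0, c));                      // keep acos in domain
    return std::acos(c) * 180.0 / 3.14159265358979323846;
}
```

For the unit normal (0.48, 0.6, -0.64), the 8-bit error is a fraction of a degree and the 16-bit error is far smaller. A fraction of a degree looks harmless, but tight specular highlights are very sensitive to the normal direction, which is why the 8-bit case shows visible aliasing.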

19.7 Optimizations

With deferred shading, the performance of lighting is directly proportional to the number of pixels on which the lighting shaders must execute. The following techniques are designed to reduce the number of pixels on which lighting calculations must be performed, and hence increase performance.

Early z-rejection, stencil masking, and dynamic branching optimizations all have something in common: they depend on locality of data. The details vary with the hardware architecture, but this holds for most hardware. Generally, for early z-rejection, stencil masking, and dynamic branching to execute as efficiently as possible, all pixels within a small screen area need to behave homogeneously with respect to the given feature. That is, they all need to be z-rejected, stenciled out, or taken down the same dynamic branch together for maximum performance.

19.7.1 Efficient Light Volumes

We use light volume geometry that tightly bounds the actual light volume. Technically, a full-screen quad could be rendered for each light and the final image would look the same. However, performance would be dramatically reduced. The fewer pixels the light volume geometry overlaps in screen space, the less often the pixel shader is executed. We use a cone-shaped geometry for spotlights, a sphere for point lights, a box for box lights, and full-screen quads only for global lights such as directional lights.

Another approach documented in most deferred shading papers is to adjust the depth test and cull mode based on the locations of the light volume and the camera. This adjustment maximizes early z-rejection. This technique requires using the CPU to determine which depth test and cull mode would most likely yield the most early-z-rejected pixels.

We settled on using a "greater" depth test and "clockwise" winding (that is, inverted winding), which works in every case for us (our light volumes never get clipped by the far clip plane). Simple CPU-side heuristics can quickly pick the culling mode and depth test most likely to perform best. However, our bottlenecks were elsewhere, so we decided not to spend any CPU resources trying to optimize performance via this technique.
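The CPU-side choice of depth test and cull mode described above can be sketched for a spherical light volume. This is an illustration of the commonly documented heuristic, not the Tabula Rasa code; the enums and `ChooseLightVolumeState` are hypothetical stand-ins for API render states (in Direct3D 9 they would map onto the `D3DRS_ZFUNC` and `D3DRS_CULLMODE` render states), and adding the near-plane distance as a safety margin is an assumption.

```cpp
#include <cassert>

// Hypothetical stand-ins for API render states.
enum class DepthFunc { LessEqual, GreaterEqual };
enum class CullFace  { Back, Front };

struct LightVolumeState { DepthFunc depth; CullFace culled; };
struct Vec3 { float x, y, z; };

// Picks state for drawing a light sphere. nearPlane is added to the radius
// (assumption) so that front faces are never drawn while they could be
// clipped by the near plane.
LightVolumeState ChooseLightVolumeState(Vec3 cameraPos, Vec3 lightPos,
                                        float radius, float nearPlane)
{
    const float dx = cameraPos.x - lightPos.x;
    const float dy = cameraPos.y - lightPos.y;
    const float dz = cameraPos.z - lightPos.z;
    const float limit = radius + nearPlane;
    if (dx * dx + dy * dy + dz * dz <= limit * limit)
    {
        // Camera is (nearly) inside the volume: cull front faces, draw the
        // back faces, and keep pixels whose scene depth lies in front of them.
        return { DepthFunc::GreaterEqual, CullFace::Front };
    }
    // Camera is outside: draw front faces with the ordinary depth test so
    // early z can reject pixels hidden behind scene geometry.
    return { DepthFunc::LessEqual, CullFace::Back };
}
```

The first branch corresponds to the single "greater" test with inverted winding used in Tabula Rasa, which is valid whenever the back faces are not clipped by the far plane.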

19.7.2 Stencil Masking

Using the stencil to mask off pixels is another common technique to speed up lighting in a deferred renderer. The basic technique is to use the stencil buffer to mark pixels that a light cannot affect. When rendering the light's volume geometry, one simply sets the stencil test to reject the marked pixels.

We tried several variations of this technique. We found that on average, the performance gains from this method were not great enough to compensate for any additional draw call overhead the technique may generate. We tried performing "cheap" passes prior to the lighting pass to mark the stencil for pixels facing away from the light or out of range of the light. This variation did increase the number of pixels later discarded by the final lighting pass. However, the draw call overhead of DirectX 9.0 along with the execution of the cheap pass seemed to cancel out or even cost more than any performance savings achieved during the final lighting pass (on average).

We do use the stencil technique while rendering the opaque geometry in the scene, marking the pixels that deferred shading will light later. This approach excludes pixels belonging to the sky box or any pixel that we know up front will not or should not have lighting calculations performed on it. It does not require any additional draw calls, so it is essentially free. The lighting pass then uses stencil testing to discard every pixel that was not marked. This technique can generate significant savings when the sky covers much of the screen, and, just as important, it has no adverse effect on performance, even in the worst case.

The draw call overhead is reduced with DirectX 10. For those readers targeting that platform, it may be worthwhile to explore using cheap passes to discard more pixels. However, using dynamic branches instead of additional passes is probably the better option if targeting Shader Model 3.0 or later.

19.7.3 Dynamic Branching

One of the key features of Shader Model 3.0 hardware is dynamic branching support. Dynamic branching not only increases the programmability of the GPU but, in the right circumstances, can also function as an optimization tool.

To use dynamic branching for optimization purposes, follow these two rules:

- Create only one or maybe two dynamic branches that maximize both the amount of skipped code and the frequency at which they are taken.

- Keep locality of data in mind. If a pixel takes a particular branch, be sure the chances of its neighbors taking the same branch are maximized.

With lighting, the best opportunities for using dynamic branching for optimization are to reject a pixel based on its distance from the light source and perhaps its surface normal. If normal maps are in use, the surface normal will be less homogeneous across a surface, which makes it a poor choice for optimization.
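The two rules above can be illustrated with a CPU-side C++ version of a point-light pixel shader that takes at most two early outs: one on distance and one on the surface normal. This is a sketch under stated assumptions, not the engine's shader: `ShadePointLight` and the linear falloff are hypothetical, and in HLSL the same tests would be written as `if` statements (the `[branch]` attribute can be used to ask the compiler for a real dynamic branch).

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Diffuse intensity of one point light at one pixel (hypothetical shader).
float ShadePointLight(Vec3 pixelPos, Vec3 normal, Vec3 lightPos, float radius)
{
    const Vec3 toLight{lightPos.x - pixelPos.x, lightPos.y - pixelPos.y,
                       lightPos.z - pixelPos.z};
    const float distSq = Dot(toLight, toLight);

    // Branch 1: out of range. Neighboring pixels on the same surface tend to
    // agree, so the branch stays coherent and skips all remaining work.
    if (distSq >= radius * radius)
        return 0.0f;

    const float dist = std::sqrt(std::fmax(distSq, 1e-12f)); // avoid divide by 0
    const float nDotL = Dot(normal, toLight) / dist;

    // Branch 2: surface faces away from the light. Only coherent when the
    // normals are smooth; with normal maps this test is far less useful.
    if (nDotL <= 0.0f)
        return 0.0f;

    const float attenuation = 1.0f - dist / radius;  // linear falloff (assumption)
    return nDotL * attenuation;
}
```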

19.8 Issues

Deferred shading is not without caveats. From the limited channels for storing material attribute information to constraints on hardware memory bandwidth, deferred shading has several issues that must be addressed to use the technique successfully.

19.8.1 Alpha-Blended Geometry

The single largest drawback of deferred shading is its inability to handle alpha-blended geometry. Alpha blending is not supported partly because of hardware limitations, but it is also fundamentally not supported by the technique itself as long as we limit ourselves to keeping track of the material attributes of only the nearest pixel. In Tabula Rasa, we solve this the same way everyone to date has: we render translucent geometry using our forward renderer after our deferred renderer has finished rendering the opaque geometry.

To support true alpha blending within a deferred renderer, some sort of deep frame buffer would be needed to keep track of every material fragment that overlapped a given pixel. This is the same mechanism required to solve order-independent transparency. This type of deep buffer is not currently supported by our target hardware.

However, our target hardware does support additive blending (a form of alpha blending) as well as alpha testing while MRTs are active, assuming the render targets use a compatible format. When alpha testing is used with MRTs active, the alpha value of color 0 is used for the test, and if the fragment fails, none of the render targets is updated. We do not use alpha testing. Instead, we use the clip intrinsic to kill a pixel while our deferred shading MRTs are active, because the alpha channel of render target 0 stores other material attribute data rather than diffuse alpha. Every pixel rendered within the deferred pipeline is fully opaque, so we choose not to spend one of our data channels on a useless alpha value.

Using the forward renderer for translucent geometry mostly solves the problem. We use our forward renderer for our water and all translucent geometry. The water shader uses our depth texture from our deferred pipeline as an input. However, the actual lighting on the water is done by traditional forward shading techniques. This solution is problematic, however, because it is nearly impossible to match the lighting on the translucent geometry with that on the opaque geometry. Also, many light types and lighting features supported by our deferred renderer are not supported by our forward renderer. This makes matching lighting between the two impossible with our engine.

In Tabula Rasa, there are two main cases in which the discrepancy in lighting really became an issue: hair and flora (ground cover). Both hair and flora look best when rendered using alpha blending. However, it was not acceptable for an avatar to walk into shadow and not have his hair darken. Likewise, it was not acceptable for a field of grass to lack shadows when all other geometry around it had them.

We settled on alpha testing (done with the clip intrinsic, as described above) rather than alpha blending for hair and flora. This allowed hair and flora to be lit using deferred shading, so their lighting was consistent with the rest of the scene. To help minimize the popping of flora, we experimented with several techniques. We considered some form of screen-door transparency, and we even tried actual transparency by rendering the fading-in flora with our forward renderer, then switching to the deferred renderer once it became fully opaque. Neither approach was acceptable. We currently scale flora up and down to simulate fading in and out.
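Scaling instead of fading keeps flora opaque, so it can stay in the deferred pipeline. A minimal C++ sketch of a distance-based scale factor follows; `FloraScale`, `fadeStart`, and `fadeEnd` are hypothetical names and tuning values, not taken from the chapter, and a linear ramp is only one possible curve. The resulting factor would multiply each flora instance's vertex positions about its base point (for example, in the vertex shader).

```cpp
#include <algorithm>
#include <cassert>

// Returns 1 at or inside fadeStart, 0 at or beyond fadeEnd, and a linear
// ramp in between. Assumes fadeEnd > fadeStart (hypothetical parameters).
float FloraScale(float distance, float fadeStart, float fadeEnd)
{
    const float t = (fadeEnd - distance) / (fadeEnd - fadeStart);
    return std::min(1.0f, std::max(0.0f, t));
}
```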

19.8.2 Memory Bandwidth

Deferred shading significantly increases memory bandwidth utilization on hardware. Instead of writing to a single render target, we render to four of them. This quadruples the number of bytes written. During the lighting pass, we then sample from all of these buffers, increasing the bytes read. Memory bandwidth, or fill rate, is the single largest factor that determines the performance of a deferred shading engine on a given piece of hardware.

The single largest factor under our control for mitigating the memory bandwidth issue is screen resolution. Memory bandwidth is directly proportional to the number of pixels rendered: 1280x1024 has two-thirds more pixels than 1024x768, so it can be as much as 66 percent slower. Performance of deferred shading-based engines is largely tied to the resolution at which they are rendered.
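The 66 percent figure follows directly from pixel counts. The C++ sketch below makes that arithmetic explicit; it assumes four 32-bit MRTs, each written exactly once per pixel, and it ignores overdraw, depth/stencil traffic, and any hardware compression, so it is a lower bound rather than a measurement. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

constexpr std::uint64_t Pixels(std::uint64_t w, std::uint64_t h) { return w * h; }

// Bytes written by one full-screen pass into `targets` render targets.
constexpr std::uint64_t GBufferBytesWritten(std::uint64_t w, std::uint64_t h,
                                            std::uint64_t targets,
                                            std::uint64_t bytesPerPixel)
{
    return Pixels(w, h) * targets * bytesPerPixel;
}

// 1280x1024 = 1,310,720 pixels; 1024x768 = 786,432 pixels: exactly 5/3.
static_assert(Pixels(1280, 1024) * 3 == Pixels(1024, 768) * 5,
              "1280x1024 has two-thirds more pixels than 1024x768");

// Four 32-bit targets at 1024x768: 12 MiB written per G-buffer pass.
static_assert(GBufferBytesWritten(1024, 768, 4, 4) == 12582912,
              "12 MiB per pass at 1024x768");
```

At 1280x1024 the same pass writes 20 MiB, and the lighting pass then reads these targets back for every lit pixel.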

Checking for independent bit depth support and utilizing reduced bit depth render targets for data that does not need extra precision can help reduce overall memory bandwidth. This was not an option for us, however, because our target hardware does not support that feature. We try to minimize what material attribute data we need to save in render targets and minimize writes and fetches from those targets when possible.

When rendering our lights, we actually are using multiple render targets. We have two MRTs active and use an additive blend. These render targets are accumulation buffers for diffuse and specular light, respectively. At first this might seem to be an odd choice for minimizing bandwidth, because we are writing to two render targets as light shaders execute instead of one. However, overall this choice can actually be more efficient.

The general lighting equation that combines diffuse and specular light for the final fragment color looks like this:

$$\text{Frag}_{\text{lit}} = \text{Frag}_{\text{unlit}} \times \text{Light}_{\text{diffuse}} + \text{Light}_{\text{specular}}$$

This equation is separable with respect to diffuse light and specular light. By keeping diffuse and specular light in separate render targets, we do not have to fetch the unlit fragment color inside of our light shaders. The light shaders compute and output only two colors: diffuse light and specular light; they do not compute anything other than the light interaction with the surface.
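Because the equation is linear in the light terms, summing diffuse and specular separately over all lights and combining once at the end gives the same result as computing each light's lit color and summing those. The C++ sketch below shows this for a single pixel, with one float standing in for each RGB color (an assumption for brevity); the struct and function names are hypothetical.

```cpp
#include <cassert>
#include <vector>

// One light's contribution at one pixel (RGB reduced to a float).
struct LightSample { float diffuse; float specular; };

// The two accumulation render targets, for one pixel.
struct Accumulators { float diffuse = 0.0f; float specular = 0.0f; };

// Light pass: each light only adds its own terms (additive blending);
// no unlit fragment color is fetched.
Accumulators AccumulateLights(const std::vector<LightSample>& lights)
{
    Accumulators acc;
    for (const LightSample& l : lights)
    {
        acc.diffuse += l.diffuse;
        acc.specular += l.specular;
    }
    return acc;
}

// Final full-screen pass: Frag_lit = Frag_unlit * Light_diffuse + Light_specular.
float Composite(float unlitColor, const Accumulators& acc)
{
    return unlitColor * acc.diffuse + acc.specular;
}
```

For two lights with (diffuse, specular) of (0.5, 0.25) and (0.25, 0.125) and an unlit color of 0.5, both orders of evaluation give 0.75.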

If we did not keep the specular light component separate from the diffuse light, the light shaders would have to actually compute the final lit fragment color. This computation requires a fetch of the unlit fragment color and a fetch of any other material attribute that affects its final lit fragment color. Computing this final color in the light shader would also mean we would lose what the actual diffuse and specular light components were; that is, we could not decompose the result of the shader back into the original light components. Having access to the diffuse and specular components in render targets lends itself perfectly to high dynamic range (HDR) or any other postprocess that needs access to "light" within the scene.

After all light shaders have executed, we perform a final full-screen pass that computes the final fragment color. This final post-processing pass is where we compute fog, edge detection and smoothing, and the final fragment color. This approach ensures that each of these operations is performed only once per pixel, minimizing fetches and maximizing texture cache coherency as we fetch material attribute data from our MRTs. Fetching material data from MRTs can be expensive, especially if it is done excessively and the texture cache in hardware is getting thrashed.

Using these light accumulation buffers also lets us easily disable the specular accumulation render target if specular lighting is disabled, saving unnecessary bandwidth. These light accumulation buffers are also great for running post-processing on lighting to increase contrast, compute HDR, or any other similar effect.

19.8.3 Memory Management

In Tabula Rasa, even at a modest 1024x768 resolution, we can consume well over 50 MB of video memory just for render targets used by deferred shading and refraction. This does not include the primary back buffer, vertex buffers, index buffers, or textures. A resolution of 1600x1200 at highest quality settings requires over 100 MB of video memory just for render targets alone.

We utilize four screen-size render targets for our material attribute data when rendering geometry with our material shaders. Our light shaders utilize two screen-size render targets. These render targets can be 32 bits per pixel or 64, depending on quality and graphics settings. Add to this a 2048x2048 32-bit shadow map for the global directional light, plus additional shadow maps that have been created for other lights.
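A rough C++ estimate shows how quickly these allocations add up. The sketch assumes the configuration described above: four material MRTs plus two light accumulation targets, all screen-sized at one bit depth, plus a single 2048x2048 32-bit shadow map. Refraction targets, additional shadow maps, and driver padding are ignored, so the result is a lower bound; `RenderTargetBytes` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>

constexpr std::uint64_t kMiB = 1024ull * 1024ull;

// (4 material MRTs + 2 light accumulation targets) at the given bit depth,
// plus one 2048x2048 32-bit shadow map.
constexpr std::uint64_t RenderTargetBytes(std::uint64_t width, std::uint64_t height,
                                          std::uint64_t bitsPerPixel)
{
    return width * height * (bitsPerPixel / 8) * (4 + 2)
         + 2048ull * 2048ull * 4ull;
}

// 1024x768 at 64 bpp: 36 MiB of screen targets + 16 MiB shadow map = 52 MiB.
static_assert(RenderTargetBytes(1024, 768, 64) == 52 * kMiB, "52 MiB at 1024x768");
```

At 1600x1200 with 64-bit targets, the same formula gives 108,937,216 bytes (about 104 MiB), before any refraction targets or extra shadow maps.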

One option is to use lower-resolution render targets and scale the results up at the end. This has lots of benefits, but we found the image quality poor. We did not pursue this option very far, but perhaps it could be used successfully for specific applications.

The amount of video memory used by render targets is only part of the issue. The lifetime and placement of these render targets in video memory have significant impact on performance as well. Even though the actual placement of these textures in video memory is out of our control, we do a couple of things to help the driver out.

We allocate our primary MRT textures back to back and before any other texture. The idea is to allocate these while the most video memory is available so the driver can place them in video memory with minimal fragmentation. We are still at the mercy of the driver, but we try to help it as much as we can.

We use a shadow-map pool and have lights share them. We limit the number of shadow maps available to the engine. Based on light priority, light location, and desired shadow-map size, we dole out the shadow maps to the lights. These shadow maps are not released but kept and reused. This minimizes fragmentation of video memory and reduces driver overhead associated with creating and releasing resources.
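A minimal C++ sketch of such a pool follows. It only models the allocation policy: a fixed number of slots that are never released, handed each frame to the highest-priority lights. The class and its members are hypothetical; a real pool would also match the requested shadow-map size and weigh light location, and what happens to lights that get no slot is an assumption of this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct ShadowRequest { int lightId; float priority; };

class ShadowMapPool
{
public:
    // The shadow maps would be created once, up front, and kept for the
    // lifetime of the renderer; this sketch tracks only the slot count.
    explicit ShadowMapPool(int mapCount) : mapCount_(mapCount) {}

    // Element i of the result is the light using shadow-map slot i this
    // frame. In this sketch, lights that do not fit get no shadow map.
    std::vector<int> Assign(std::vector<ShadowRequest> requests) const
    {
        std::stable_sort(requests.begin(), requests.end(),
                         [](const ShadowRequest& a, const ShadowRequest& b)
                         { return a.priority > b.priority; });
        std::vector<int> owners;
        for (const ShadowRequest& r : requests)
        {
            if (static_cast<int>(owners.size()) == mapCount_)
                break;
            owners.push_back(r.lightId);
        }
        return owners;
    }

private:
    int mapCount_;  // fixed budget: maps are reused, never recreated
};
```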

Related to this, we also throttle how many shadow maps get rendered (or regenerated) in any one frame. If multiple lights all require their shadow maps to be rebuilt on the same frame, the engine may only rebuild one or two of them that frame, amortizing the cost of rebuilding all of them across multiple frames.
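The throttling can be sketched as a queue that releases at most a fixed number of rebuilds per frame. This C++ version is illustrative only: the class name, the first-in, first-out order, and the per-frame limit are assumptions, and a real engine would also avoid queuing the same light twice and might reorder by priority.

```cpp
#include <cassert>
#include <deque>
#include <vector>

class ShadowRebuildQueue
{
public:
    explicit ShadowRebuildQueue(int maxPerFrame) : maxPerFrame_(maxPerFrame) {}

    // Called when a light's shadow map becomes stale.
    void MarkDirty(int lightId) { pending_.push_back(lightId); }

    // Returns the lights whose shadow maps should be rebuilt this frame;
    // the rest wait for later frames, amortizing the cost.
    std::vector<int> NextFrame()
    {
        std::vector<int> rebuild;
        while (!pending_.empty() &&
               static_cast<int>(rebuild.size()) < maxPerFrame_)
        {
            rebuild.push_back(pending_.front());
            pending_.pop_front();
        }
        return rebuild;
    }

private:
    int maxPerFrame_;
    std::deque<int> pending_;
};
```

With a limit of two and five stale lights, the rebuilds are spread over three frames.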

19.9 Results

In Tabula Rasa, we achieved our goals using a deferred shading renderer. We found the scalability and performance of deferred shading acceptable. Some early Shader Model 3.0 hardware, such as the NVIDIA GeForce 6800 Ultra, is able to hold close to 30 fps with basic settings and medium resolutions. We found that the latest DirectX 10-class hardware, such as the NVIDIA GeForce 8800 and ATI Radeon 2900, is able to run Tabula Rasa extremely well at high resolutions with all settings maxed out.

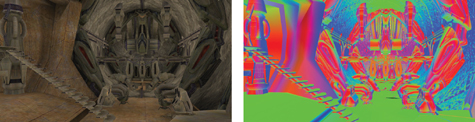

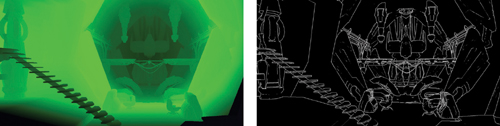

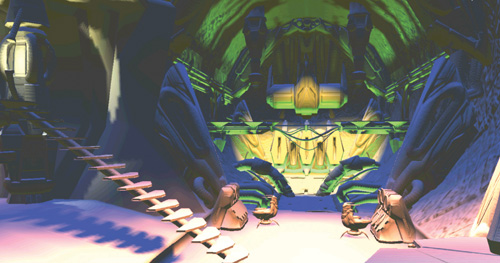

Figures 19-6 through 19-10 show the results we obtained with our approach.

Figure 19-6 An Outdoor Scene with a Global Shadow Map

Figure 19-7 An Indoor Scene with Numerous Static Shadow-Casting Lights

Figure 19-8 Fragment Colors and Normals

Figure 19-9 Depth and Edge Weight Visualization

Figure 19-10 Light Accumulation
